Datasets

Classic datasets are added regularly. If you find this useful, please give it a star!

Classification datasets

Cats vs. Dogs dataset

Link: https://pan.baidu.com/s/1hESO4OI_i0FjmHnkbqt-Hw Extraction code: czla

Imagenette dataset

Link: https://pan.baidu.com/s/1D8ECW0G-C4mXpC7h6kYsMA Extraction code: a45w

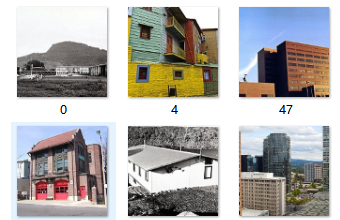

Natural scene classification dataset

Link: https://pan.baidu.com/s/1YudPaWyzd0NUloePDpW8oA Extraction code: ipjp

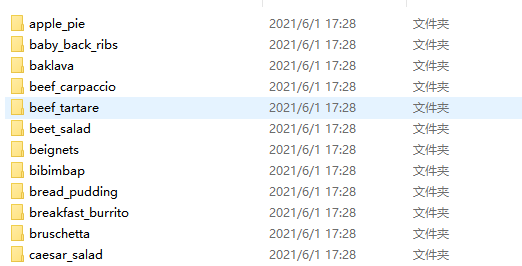

Food-101 dataset

Link: https://pan.baidu.com/s/1iouChBMnfSg63qC2u7PU_g Extraction code: 396f

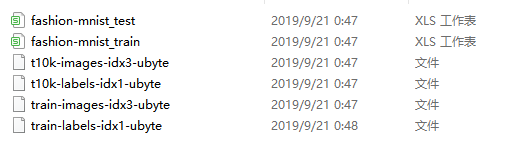

Fashion MNIST dataset

Link: https://pan.baidu.com/s/1rOXZ9IANyaIopsQr9gDpmQ Extraction code: rnf3

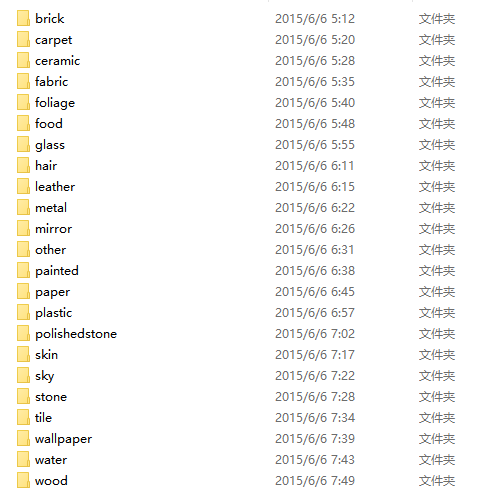

MINC-2500 dataset

Link: https://pan.baidu.com/s/1Tjhb3hEClFAPWUz-0gfZRQ Extraction code: qtsa

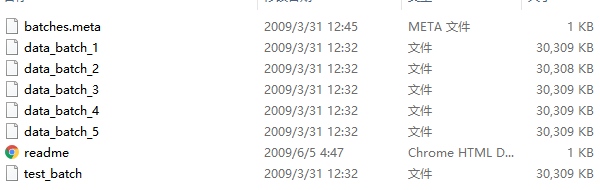

CIFAR-10 dataset

Link: https://pan.baidu.com/s/15LQPvcW0EkEEjN_2Lu2T3g Extraction code: 956t

Papers

3D AffordanceNet: A Benchmark for Visual Object Affordance Understanding

The ability to understand the ways to interact with objects from visual cues, a.k.a. visual affordance, is essential to vision-guided robotic research. This involves categorizing, segmenting and reasoning about visual affordance. Relevant studies in the 2D and 2.5D image domains have been conducted previously; however, a truly functional understanding of object affordance requires learning and prediction in the 3D physical domain, which is still absent in the community. In this work, we present the 3D AffordanceNet dataset, a benchmark of 23k shapes from 23 semantic object categories, annotated with 18 visual affordance categories. Based on this dataset, we provide three benchmarking tasks for evaluating visual affordance understanding: full-shape, partial-view and rotation-invariant affordance estimation. Three state-of-the-art point cloud deep learning networks are evaluated on all tasks. In addition, we investigate a semi-supervised learning setup to explore the possibility of benefiting from unlabeled data. Comprehensive results on our contributed dataset show the promise of visual affordance understanding as a valuable yet challenging benchmark.

ACTION-Net: Multipath Excitation for Action Recognition

Spatio-temporal, channel-wise, and motion patterns are three complementary and crucial types of information for video action recognition. Conventional 2D CNNs are computationally cheap but cannot capture temporal relationships; 3D CNNs can achieve good performance but are computationally intensive. In this work, we tackle this dilemma by designing a generic and effective module that can be embedded into 2D CNNs. To this end, we propose a spAtiotemporal, Channel and moTion excitatION (ACTION) module consisting of three paths: a Spatio-Temporal Excitation (STE) path, a Channel Excitation (CE) path, and a Motion Excitation (ME) path. The STE path employs a one-channel 3D convolution to characterize the spatio-temporal representation. The CE path adaptively recalibrates channel-wise feature responses by explicitly modeling interdependencies between channels with respect to the temporal dimension. The ME path calculates feature-level temporal differences, which are then used to excite motion-sensitive channels. We equip 2D CNNs with the proposed ACTION module to form a simple yet effective ACTION-Net with very limited extra computational cost. ACTION-Net is shown to consistently outperform its 2D CNN counterparts on three backbones (i.e., ResNet-50, MobileNet V2 and BNInception) across three datasets (i.e., Something-Something V2, Jester, and EgoGesture). Code is available at https://github.com/V-Sense/ACTION-Net.
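A minimal sketch of the motion-excitation idea described above, in plain Python: feature-level temporal differences between consecutive frames are turned into a sigmoid mask that reweights each channel. It assumes features are already spatially pooled to one value per channel per frame, zero-pads the difference for the last frame, and uses a residual form `x + x * mask`; all three are assumptions of this sketch. The function name `motion_excitation` is hypothetical, and the convolutions and pooling of the actual ME path are omitted.

```python
import math
from typing import List

# [T][C]: one pooled value per channel per frame (an assumption of this sketch).
Frames = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def motion_excitation(features: Frames) -> Frames:
    """Hypothetical, simplified motion-excitation gate (not the paper's exact ME path)."""
    num_frames = len(features)
    num_channels = len(features[0])
    # Feature-level temporal difference between consecutive frames;
    # the last frame has no successor, so its difference is zero-padded.
    diffs = [
        [features[t + 1][c] - features[t][c] for c in range(num_channels)]
        if t + 1 < num_frames
        else [0.0] * num_channels
        for t in range(num_frames)
    ]
    # Sigmoid mask per channel: an increase between frames gives a mask above 0.5,
    # a decrease gives one below 0.5. Residual form x + x * mask (assumed).
    return [
        [features[t][c] * (1.0 + sigmoid(diffs[t][c])) for c in range(num_channels)]
        for t in range(num_frames)
    ]


# Channel 1 changes between the two frames, channel 0 does not.
print(motion_excitation([[1.0, 2.0], [1.0, 4.0]]))
```

Channels whose response rises between frames are amplified more than static ones, which is the intuition behind exciting motion-sensitive channels.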

A connectomic study of a petascale fragment of human cerebral cortex

We acquired a rapidly preserved human surgical sample from the temporal lobe of the cerebral cortex. We stained a 1 mm³ volume with heavy metals, embedded it in resin, cut more than 5000 slices at ~30 nm and imaged these sections using a high-speed multibeam scanning electron microscope. We used computational methods to render the three-dimensional structure of 50,000 cells, hundreds of millions of neurites and 130 million synaptic connections. The 1.4 petabyte electron microscopy volume, the segmented cells, cell parts, blood vessels, myelin, inhibitory and excitatory synapses, and 100 manually proofread cells are available to peruse online. Despite the incompleteness of the automated segmentation caused by split and merge errors, many interesting features were evident. Glia outnumbered neurons 2:1 and oligodendrocytes were the most common cell type in the volume. The E:I balance of neurons was 69:31%, as was the ratio of excitatory versus inhibitory synapses in the volume. The E:I ratio of synapses was significantly higher on pyramidal neurons than on inhibitory interneurons. We found that deep layer excitatory cell types can be classified into subsets based on structural and connectivity differences, that chandelier interneurons not only innervate excitatory neuron initial segments as previously described, but also each other’s initial segments, and that among the thousands of weak connections established on each neuron, there exist rarer highly powerful axonal inputs that establish multi-synaptic contacts (up to ~20 synapses) with target neurons. Our analysis indicates that these strong inputs are specific, and allow small numbers of axons to have an outsized role in the activity of some of their postsynaptic partners.

Balance Control of a Novel Wheel-legged Robot: Design and Experiments

This paper presents a balance control technique for a novel wheel-legged robot. We first derive a dynamic model of the robot and then apply a linear feedback controller based on output regulation and linear quadratic regulator (LQR) methods to keep the robot standing on the ground without large backward and forward movements. To account for the nonlinearities of the model and obtain a large region of stability, a nonlinear controller based on the interconnection and damping assignment passivity-based control (IDA-PBC) method is used to control the robot in more general scenarios. Physical experiments are performed with various control tasks. Experimental results demonstrate that the proposed linear output regulator can keep the robot standing, while the proposed nonlinear controller can balance the robot from an initial angle far from the equilibrium point, or under a changing robot height.
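The robot's dynamic model is not given here, so the sketch below only illustrates the LQR step on a hypothetical scalar system dx/dt = a·x + b·u with cost ∫(q·x² + r·u²)dt. For this case the algebraic Riccati equation 2aP - (b²/r)P² + q = 0 has a closed-form stabilizing root, and the feedback law is u = -Kx with K = bP/r. The function name `scalar_lqr_gain` is illustrative; a real multi-state design such as the robot's would solve the matrix Riccati equation numerically.

```python
import math


def scalar_lqr_gain(a: float, b: float, q: float, r: float) -> float:
    """LQR gain K for dx/dt = a*x + b*u with cost = integral of (q*x^2 + r*u^2) dt.

    Illustrative scalar case only (hypothetical system, not the robot model).
    The optimal control is u = -K * x.
    """
    if b == 0.0:
        raise ValueError("system must be controllable (b != 0)")
    if q < 0.0 or r <= 0.0:
        raise ValueError("weights must satisfy q >= 0 and r > 0")
    # Stabilizing (non-negative) root of 2*a*P - (b**2 / r) * P**2 + q = 0.
    p = r * (a + math.sqrt(a * a + b * b * q / r)) / (b * b)
    return b * p / r


# Usage: an open-loop unstable system (a > 0) is stabilized by the feedback.
a, b, q, r = 2.0, 1.0, 1.0, 1.0
k = scalar_lqr_gain(a, b, q, r)
closed_loop_pole = a - b * k  # equals -sqrt(a^2 + b^2 * q / r), which is negative
print(k, closed_loop_pole)
```

Raising q relative to r penalizes state deviation more heavily and yields a larger gain, which is the same trade-off tuned in a multi-state LQR design.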

Very Deep Convolutional Networks for Large-Scale Image Recognition

In this work we investigate the effect of the convolutional network depth on its accuracy in the large-scale image recognition setting. Our main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3x3) convolution filters, which shows that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16-19 weight layers. These findings were the basis of our ImageNet Challenge 2014 submission, where our team secured the first and the second places in the localisation and classification tracks respectively. We also show that our representations generalise well to other datasets, where they achieve state-of-the-art results. We have made our two best-performing ConvNet models publicly available to facilitate further research on the use of deep visual representations in computer vision.
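The abstract credits very small 3×3 filters. The paper's body argues that stacked stride-1 3×3 layers reach the receptive field of a larger filter with fewer weights: three 3×3 layers cover 7×7 using 27C² weights versus 49C² for a single 7×7 layer, with C input and output channels. The helper names below are illustrative, and biases are ignored.

```python
def conv_weights(kernel: int, channels: int) -> int:
    """Weights in one kernel x kernel conv layer with `channels` inputs and outputs (no bias)."""
    return kernel * kernel * channels * channels


def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1 conv layers."""
    return 1 + num_layers * (kernel - 1)


C = 256  # example channel count
three_3x3 = 3 * conv_weights(3, C)  # 27 * C^2
one_7x7 = conv_weights(7, C)        # 49 * C^2
print(stacked_receptive_field(3), three_3x3, one_7x7)  # prints: 7 1769472 3211264
```

The stack also inserts a non-linearity after each 3×3 layer, which a single 7×7 layer does not have.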

Deep Residual Learning for Image Recognition

Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth. On the ImageNet dataset we evaluate residual nets with a depth of up to 152 layers, 8× deeper than VGG nets but still of lower complexity. An ensemble of these residual nets achieves 3.57% error on the ImageNet test set. This result won first place in the ILSVRC 2015 classification task. We also present analysis on CIFAR-10 with 100 and 1000 layers. The depth of representations is of central importance for many visual recognition tasks. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. Deep residual nets are the foundation of our submissions to the ILSVRC & COCO 2015 competitions, where we also won first place in the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.
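A toy sketch of the reformulation described above: instead of fitting a desired mapping H(x) directly, the stacked layers fit the residual F(x) = H(x) - x, and an identity shortcut adds x back, giving y = ReLU(F(x) + x). Here `residual_fn` stands in for the stacked layers (a hypothetical placeholder, not the paper's convolution and batch-norm stack), and vectors are plain Python lists.

```python
from typing import Callable, List

Vector = List[float]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def residual_block(x: Vector, residual_fn: Callable[[Vector], Vector]) -> Vector:
    """Compute y = ReLU(F(x) + x) with an identity shortcut."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        # An identity shortcut needs matching shapes; the paper uses a projection otherwise.
        raise ValueError("F(x) and x must have the same length")
    return relu([f + xi for f, xi in zip(fx, x)])


def zero_residual(v: Vector) -> Vector:
    """Hypothetical layers that have learned F(x) = 0."""
    return [0.0] * len(v)


# If the best mapping is (close to) identity, the layers only need to push F toward zero.
print(residual_block([1.0, 2.0], zero_residual))  # [1.0, 2.0]
```

Driving F toward zero is easier for a solver than fitting an identity map through several non-linear layers, which is the paper's motivation for the shortcut.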

Going Deeper with Convolutions

We propose a deep convolutional neural network architecture codenamed "Inception", which was responsible for setting the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC 2014). The main hallmark of this architecture is the improved utilization of the computing resources inside the network. This was achieved by a carefully crafted design that allows for increasing the depth and width of the network while keeping the computational budget constant. To optimize quality, the architectural decisions were based on the Hebbian principle and the intuition of multi-scale processing. One particular incarnation used in our submission for ILSVRC 2014 is called GoogLeNet, a 22-layer-deep network, whose quality is assessed in the context of classification and detection.
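A small arithmetic sketch of two Inception ideas: the outputs of the parallel 1×1, 3×3, 5×5 and pooling branches are concatenated along the channel axis, so their widths add up, and 1×1 "reduce" convolutions shrink the channel count before the expensive 5×5 convolution. The numbers below (module 3a, a 28×28 input with 192 channels, a 16-channel 5×5 reduce) are taken from the GoogLeNet paper's architecture table; the helper names are illustrative.

```python
from typing import Dict


def conv_mult_adds(height: int, width: int, kernel: int, c_in: int, c_out: int) -> int:
    """Multiply-adds of a stride-1, 'same'-padded conv layer (bias ignored)."""
    return height * width * kernel * kernel * c_in * c_out


def inception_output_channels(branch_widths: Dict[str, int]) -> int:
    """Branch outputs are concatenated along channels, so widths simply add."""
    return sum(branch_widths.values())


inception_3a = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}

# 5x5 branch of inception (3a): direct conv vs. a 1x1 reduction to 16 channels first.
direct_5x5 = conv_mult_adds(28, 28, 5, 192, 32)
reduced_5x5 = conv_mult_adds(28, 28, 1, 192, 16) + conv_mult_adds(28, 28, 5, 16, 32)
print(inception_output_channels(inception_3a), direct_5x5, reduced_5x5)
# prints: 256 120422400 12443648
```

The roughly 10× saving on the 5×5 branch is what lets the module grow wider without exceeding the computational budget.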

Densely Connected Convolutional Networks

Recent work has shown that convolutional networks can be substantially deeper, more accurate, and more efficient to train if they contain shorter connections between layers close to the input and those close to the output. In this paper, we embrace this observation and introduce the Dense Convolutional Network (DenseNet), which connects each layer to every other layer in a feed-forward fashion. Whereas traditional convolutional networks with L layers have L connections (one between each layer and its subsequent layer), our network has L(L+1)/2 direct connections. For each layer, the feature-maps of all preceding layers are used as inputs, and its own feature-maps are used as inputs into all subsequent layers. DenseNets have several compelling advantages: they alleviate the vanishing-gradient problem, strengthen feature propagation, encourage feature reuse, and substantially reduce the number of parameters. We evaluate our proposed architecture on four highly competitive object recognition benchmark tasks (CIFAR-10, CIFAR-100, SVHN, and ImageNet). DenseNets obtain significant improvements over the state-of-the-art on most of them, whilst requiring less computation to achieve high performance. Code and pre-trained models are publicly available.
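A sketch that enumerates the direct connections in one dense block to check the L(L+1)/2 count from the abstract: counting the block input as source 0, layer l (1-based) receives the feature-maps of all l preceding sources. The second helper uses the growth-rate relation from the paper's body, where layer l sees k0 + k(l-1) input feature-maps for block input width k0 and growth rate k. Function names are illustrative.

```python
from typing import List, Tuple


def dense_connections(num_layers: int) -> List[Tuple[int, int]]:
    """(source, target) pairs in a dense block; source 0 is the block input."""
    return [(src, dst) for dst in range(1, num_layers + 1) for src in range(dst)]


def input_feature_maps(layer: int, k0: int, growth_rate: int) -> int:
    """Feature-maps entering layer `layer` (1-based): block input plus k per earlier layer."""
    return k0 + growth_rate * (layer - 1)


L = 5
assert len(dense_connections(L)) == L * (L + 1) // 2  # 15 connections, versus 5 in a plain chain
print(input_feature_maps(5, k0=16, growth_rate=12))   # 16 + 12 * 4 = 64
```

Because each layer adds only k new feature-maps, the layers can stay narrow, which is one reason DenseNets need fewer parameters.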

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

We present a class of efficient models called MobileNets for mobile and embedded vision applications. MobileNets are based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks. We introduce two simple global hyper-parameters that efficiently trade off between latency and accuracy. These hyper-parameters allow the model builder to choose the right-sized model for their application based on the constraints of the problem. We present extensive experiments on resource and accuracy trade-offs and show strong performance compared to other popular models on ImageNet classification. We then demonstrate the effectiveness of MobileNets across a wide range of applications and use cases including object detection, fine-grained classification, face attributes and large-scale geo-localization.
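A cost sketch for the depthwise separable convolution described above, using the paper's notation: kernel size D_K, M input channels, N output channels and a D_F × D_F feature map. A standard convolution costs D_K·D_K·M·N·D_F·D_F multiply-adds, while a depthwise convolution followed by a 1×1 pointwise convolution costs D_K·D_K·M·D_F·D_F + M·N·D_F·D_F, a ratio of 1/N + 1/D_K². The two global hyper-parameters are the width multiplier α (thins M and N) and the resolution multiplier ρ (shrinks D_F). Function names and the example layer size are illustrative.

```python
def standard_conv_cost(dk: int, m: int, n: int, df: int) -> float:
    """Multiply-adds of a standard dk x dk convolution, M -> N channels, df x df output."""
    return float(dk * dk * m * n * df * df)


def separable_conv_cost(dk: int, m: int, n: int, df: int,
                        alpha: float = 1.0, rho: float = 1.0) -> float:
    """Depthwise (dk x dk per channel) plus pointwise (1 x 1) cost, with width/resolution multipliers."""
    m_a, n_a, df_r = alpha * m, alpha * n, rho * df
    depthwise = dk * dk * m_a * df_r * df_r
    pointwise = m_a * n_a * df_r * df_r
    return depthwise + pointwise


# Example layer: 3x3 kernels, 512 -> 512 channels, 14 x 14 feature map.
std = standard_conv_cost(3, 512, 512, 14)
sep = separable_conv_cost(3, 512, 512, 14)
print(std / sep)  # roughly 8.8x fewer multiply-adds for the separable version
```

Halving ρ divides the cost by four, while α shrinks the dominant pointwise term roughly quadratically, which is how the two multipliers trade accuracy for latency.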

ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

We introduce an extremely computation-efficient CNN architecture named ShuffleNet, which is designed specifically for mobile devices with very limited computing power (e.g., 10-150 MFLOPs). The new architecture utilizes two new operations, pointwise group convolution and channel shuffle, to greatly reduce computation cost while maintaining accuracy. Experiments on ImageNet classification and MS COCO object detection demonstrate the superior performance of ShuffleNet over other structures, e.g. lower top-1 error (absolute 7.8%) than the recent MobileNet on the ImageNet classification task, under a computation budget of 40 MFLOPs. On an ARM-based mobile device, ShuffleNet achieves a ~13x actual speedup over AlexNet while maintaining comparable accuracy.
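A minimal sketch of the channel shuffle operation on a list of channel indices: with g groups of n channels each, the channel axis is reshaped to (g, n), transposed to (n, g) and flattened, so the next grouped convolution receives channels from every group. The function name and list representation are illustrative; in a framework the same effect is typically obtained with reshape and transpose on a tensor.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """Reshape to (groups, n), transpose to (n, groups), flatten."""
    if groups <= 0 or len(channels) % groups != 0:
        raise ValueError("channel count must be a positive multiple of groups")
    per_group = len(channels) // groups
    # Output position i * groups + g takes channel g * per_group + i.
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


shuffled = channel_shuffle(list(range(6)), groups=2)
print(shuffled)  # [0, 3, 1, 4, 2, 5]
# A following 2-group convolution sees [0, 3, 1] and [4, 2, 5]: both mix channels
# from the original group {0, 1, 2} and the original group {3, 4, 5}.
```

Without the shuffle, stacked group convolutions would keep each group's information isolated; the permutation itself has no parameters and negligible cost.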