Update to PyTorch 1.0

densenet.py

0 → 100644

dsp.py

0 → 100644

104.7 KB

23.2 KB

image/spectrum_N1.png

Deleted

100644 → 0

24.3 KB

image/spectrum_N2.png

Deleted

100644 → 0

24.3 KB

image/spectrum_N3.png

Deleted

100644 → 0

23.2 KB

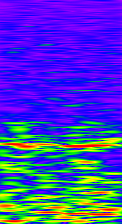

image/spectrum_Stage1.png

0 → 100644

20.2 KB

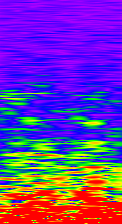

image/spectrum_Stage2.png

0 → 100644

20.1 KB

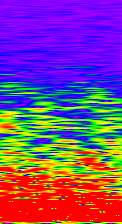

image/spectrum_Stage3.png

0 → 100644

20.0 KB

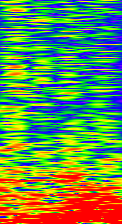

image/spectrum_Wake.png

0 → 100644

25.0 KB

image/spectrum_wake.png

Deleted

100644 → 0

28.1 KB

transformer.py

0 → 100644