docs: update docs and server ports


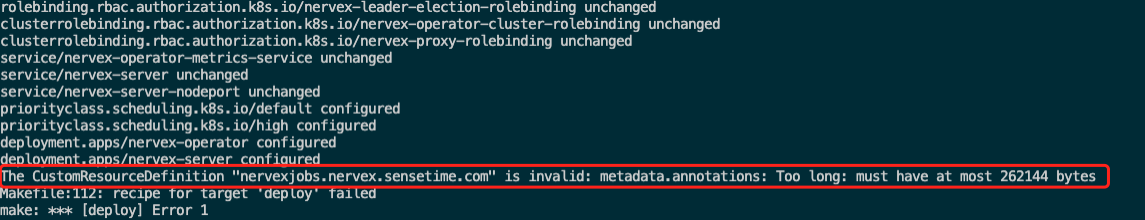

docs/images/deploy-failed.png

Deleted

100644 → 0

72.5 KB

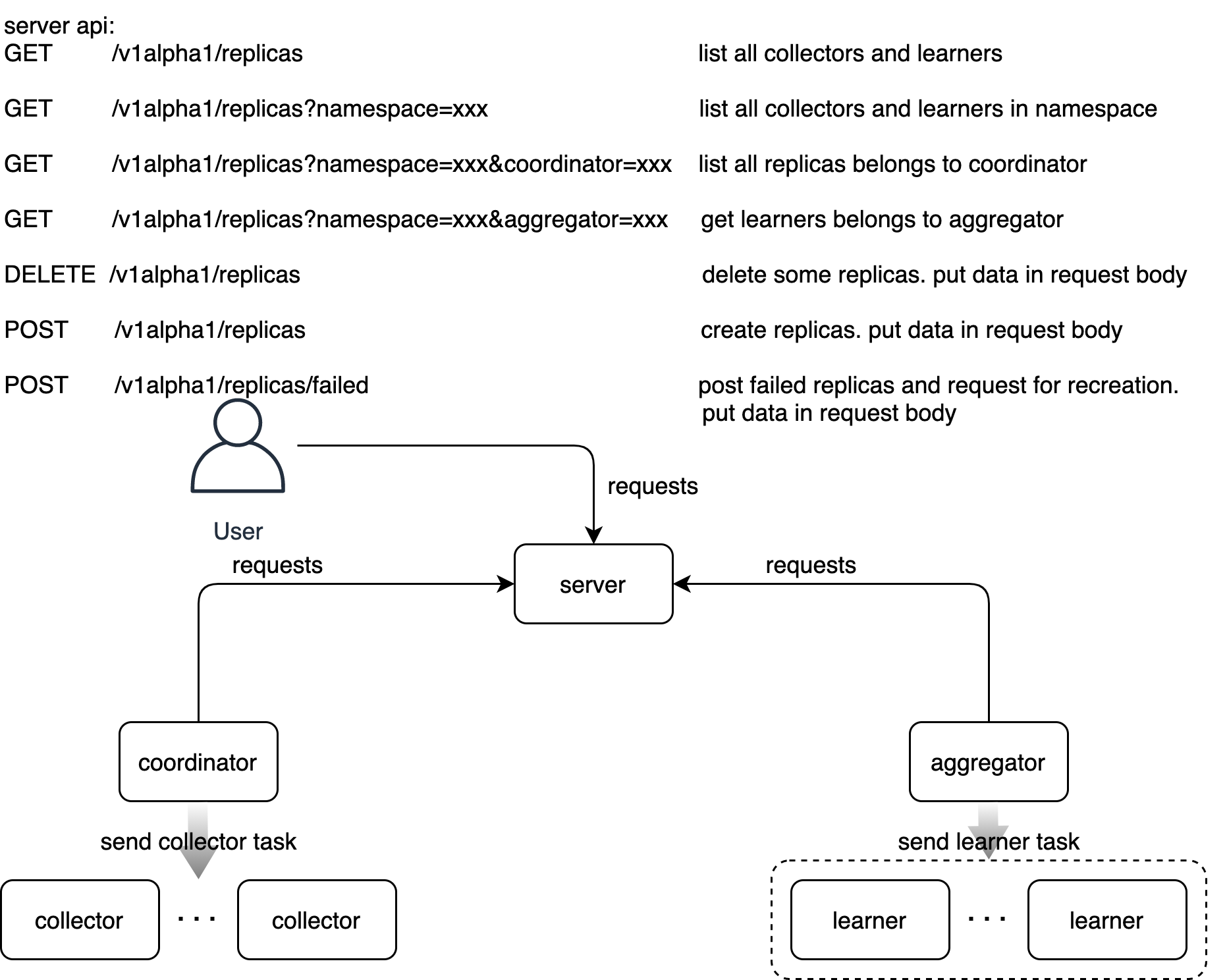

docs/images/di-api.png

Deleted

100644 → 0

235.0 KB

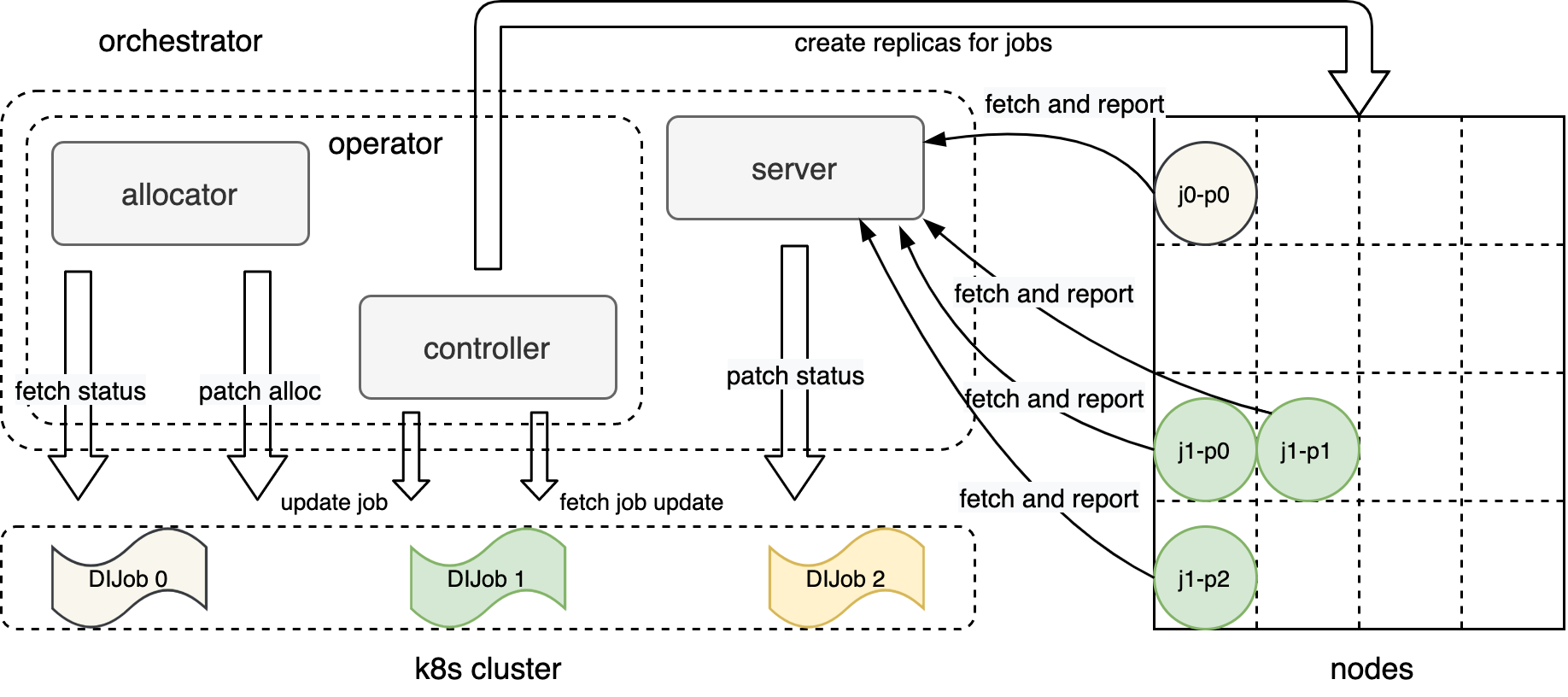

docs/images/di-arch.svg

Deleted

100644 → 0

The source diff could not be displayed because it is too large. You can view the blob instead.

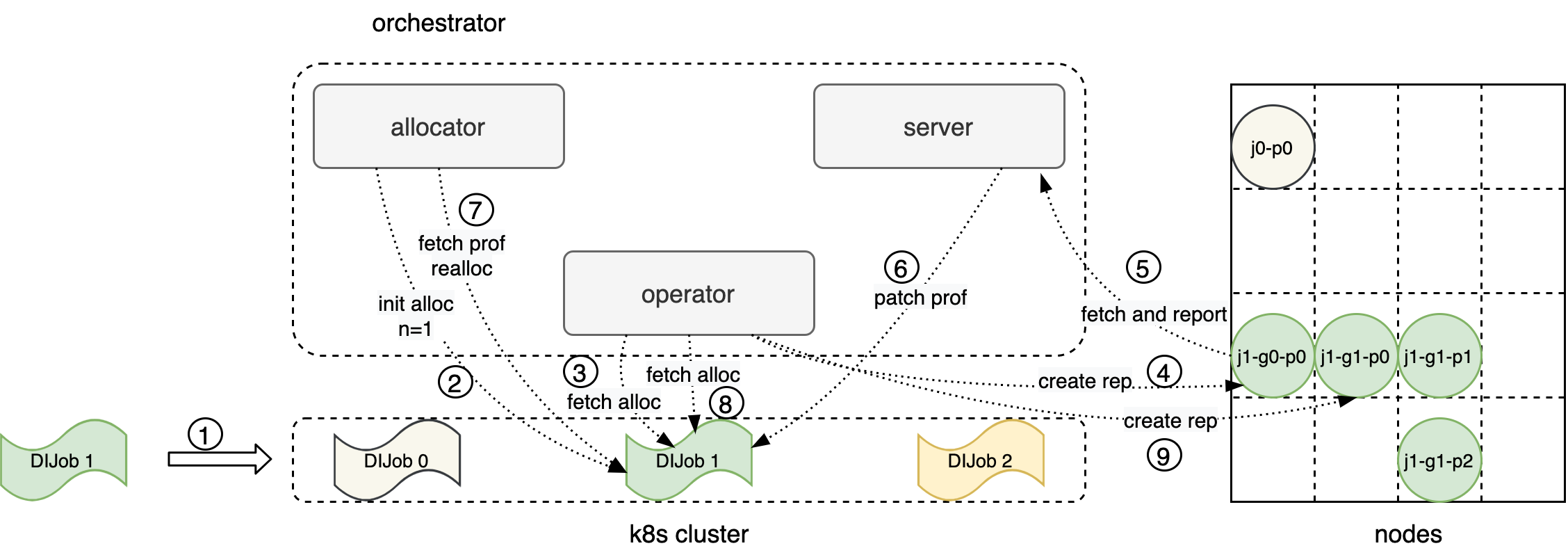

docs/images/di-engine-arch.png

0 → 100644

201.2 KB

210.2 KB

184.6 KB