1.0.0rc3: Face+Debug+MPI render improved+minor bugs fixed (see release_notes)

doc/media/keypoints_face.png

0 → 100644

34.3 KB

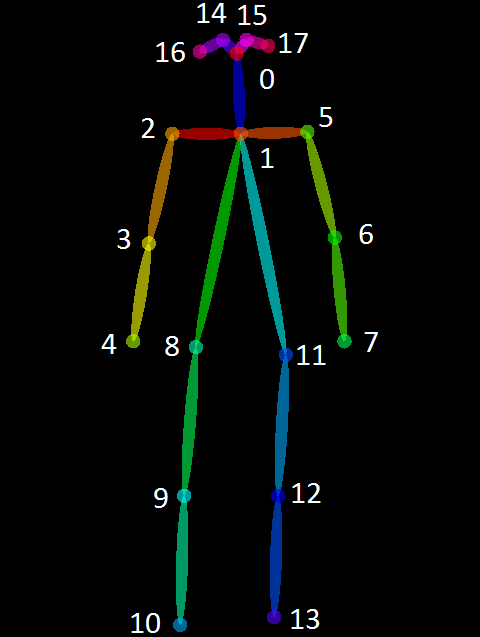

doc/media/keypoints_pose.png

0 → 100644

10.9 KB

doc/release_notes.md

0 → 100644

include/openpose/core/point.hpp

0 → 100644

models/face/pose_deploy.prototxt

0 → 100644

src/openpose/core/point.cpp

0 → 100644

src/openpose/core/rectangle.cpp

0 → 100644
