Pulsar website using Docusaurus (#2206)

### Motivation

Improve the documentation and usability of the Pulsar website. This moves the website and documentation to a new framework, [Docusaurus](https://docusaurus.io/), which will make them easier to maintain going forward.

### Modifications

A new version of the website in the `site2` directory. This also updates the Pulsar build Docker image to add the dependencies needed to build the new website.

### Result

A more usable website and documentation. A preview of the site is available at https://cckellogg.github.io/incubator-pulsar.

*Some links and images may not work on the preview, because it is a test-only site.*

### Changed files

- `site2/.gitignore` (new file, mode 100644)
- `site2/README.md` (new file, mode 100644)
- `site2/docs/adaptors-kafka.md` (new file, mode 100644)

- `site2/docs/adaptors-spark.md` (new file, mode 100644)
- `site2/docs/adaptors-storm.md` (new file, mode 100644)
- `site2/docs/admin-api-brokers.md` (new file, mode 100644)
- `site2/docs/admin-api-clusters.md` (new file, mode 100644)
- `site2/docs/admin-api-overview.md` (new file, mode 100644)
- `site2/docs/admin-api-tenants.md` (new file, mode 100644)
- `site2/docs/administration-auth.md` (new file, mode 100644)

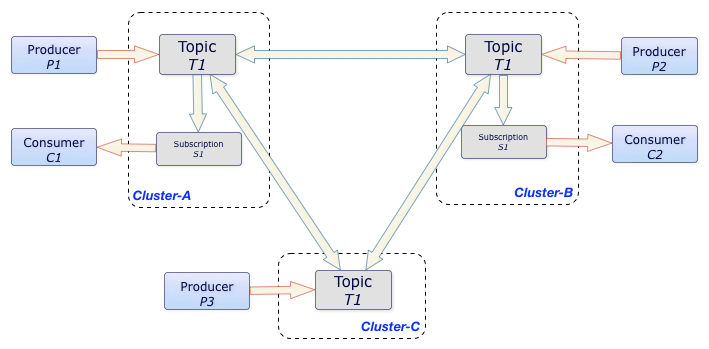

- `site2/docs/administration-geo.md` (new file, mode 100644)

- `site2/docs/assets/pulsar-io.png` (new file, mode 100644)

- `site2/docs/client-libraries-go.md` (new file, mode 100644)

- `site2/docs/deploy-aws.md` (new file, mode 100644)

- `site2/docs/deploy-bare-metal.md` (new file, mode 100644)

此差异已折叠。

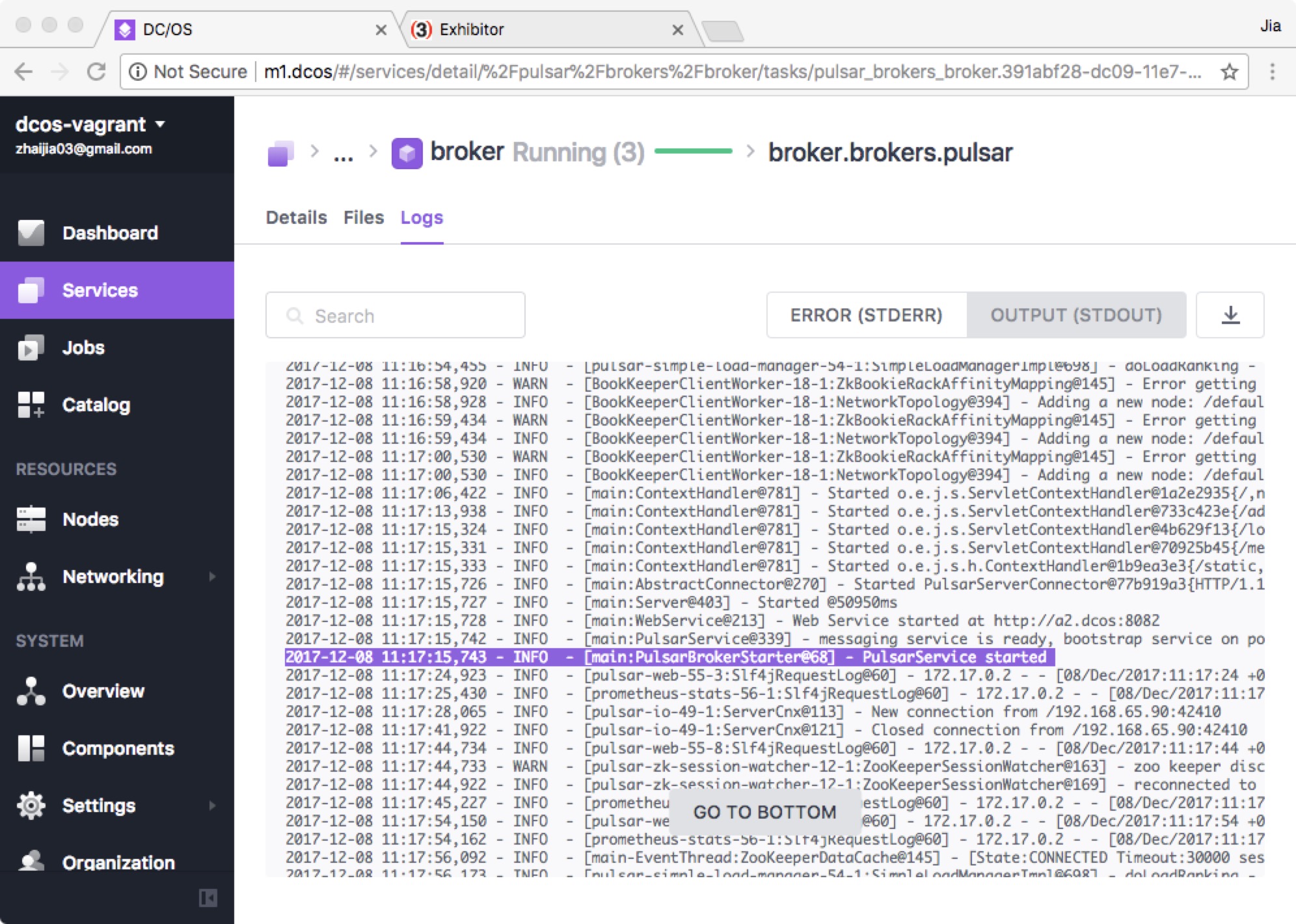

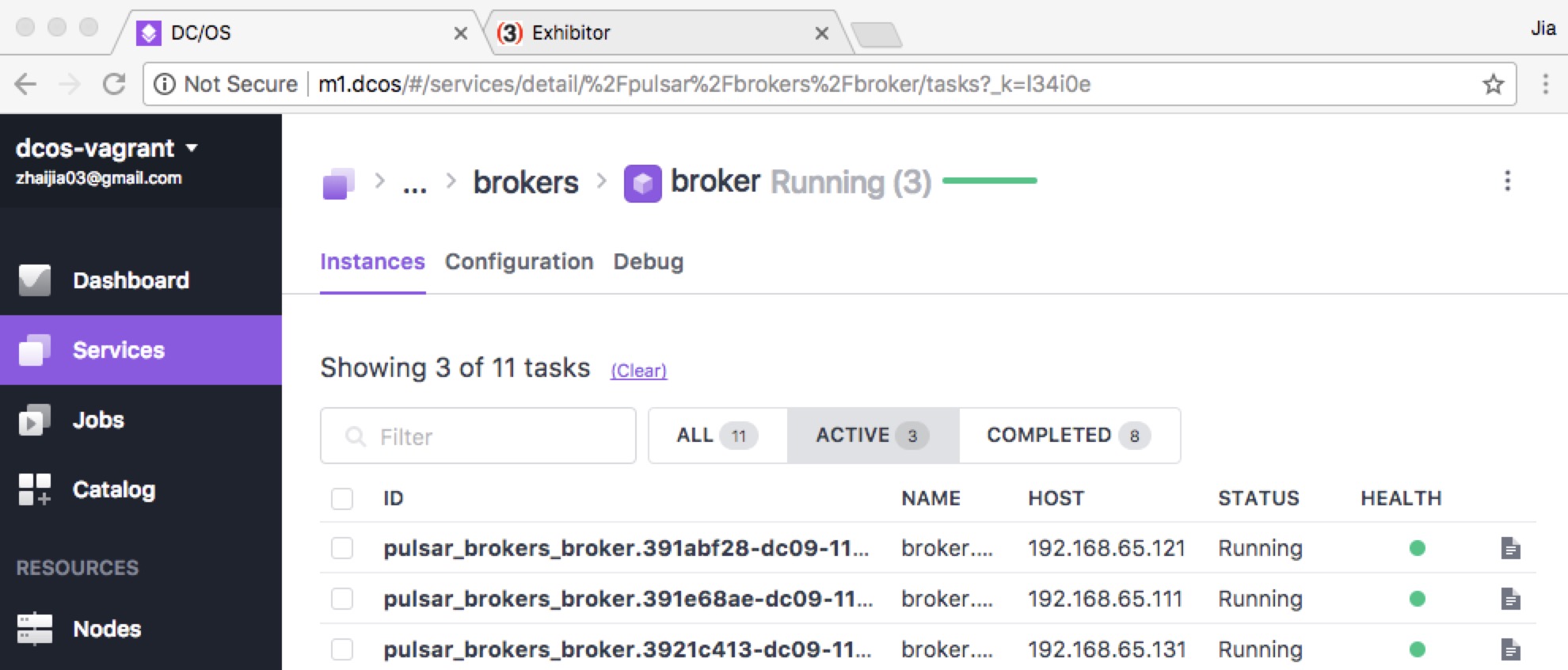

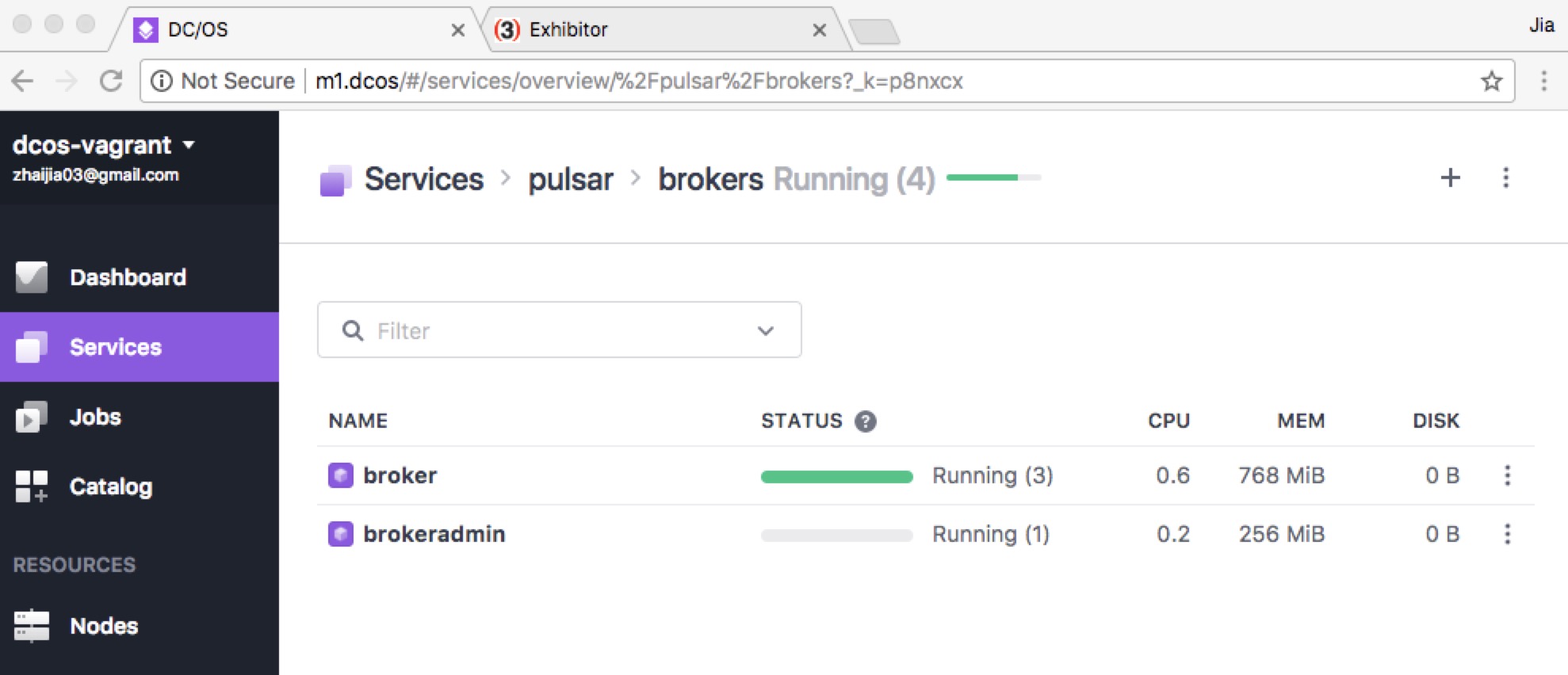

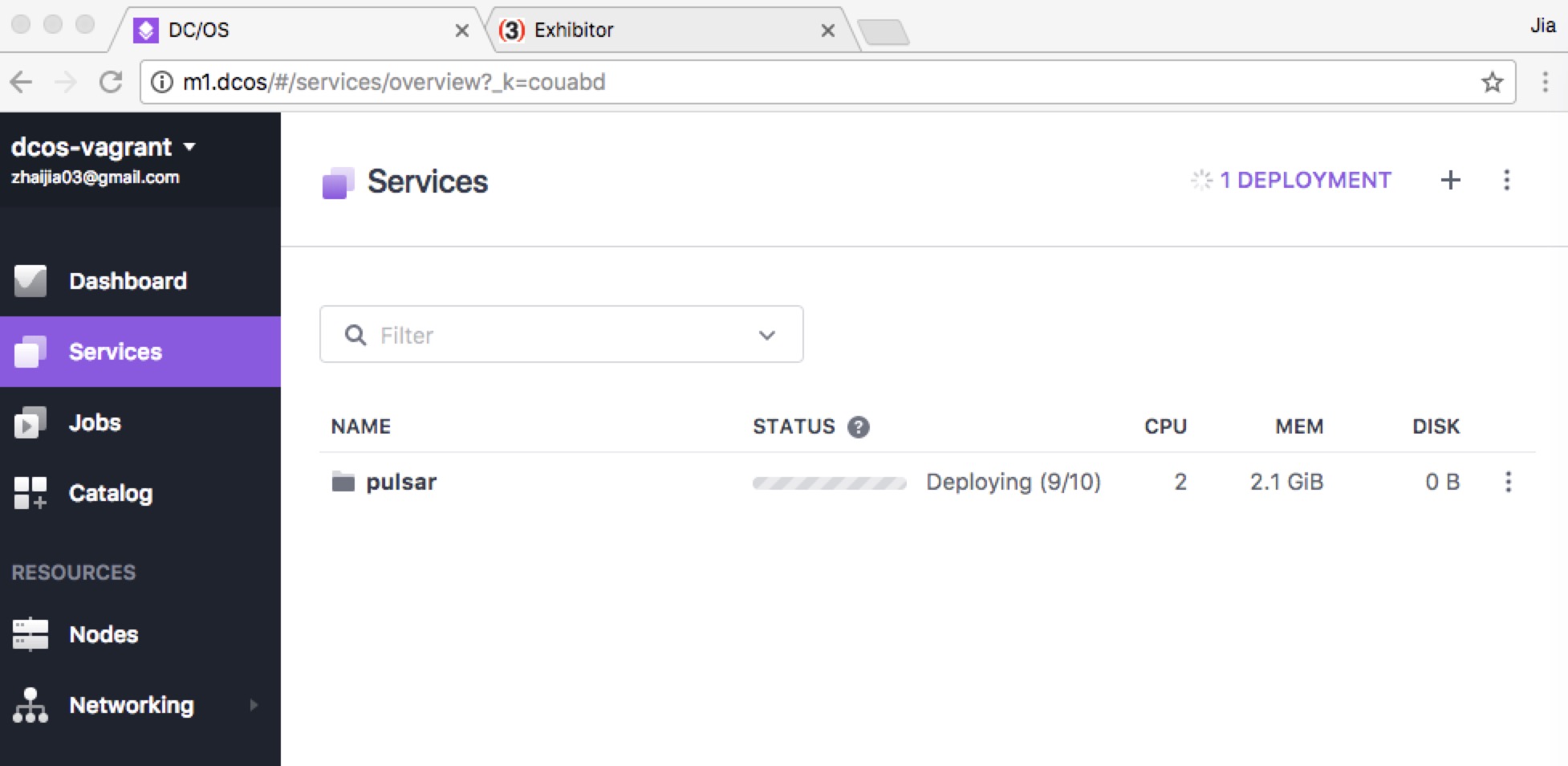

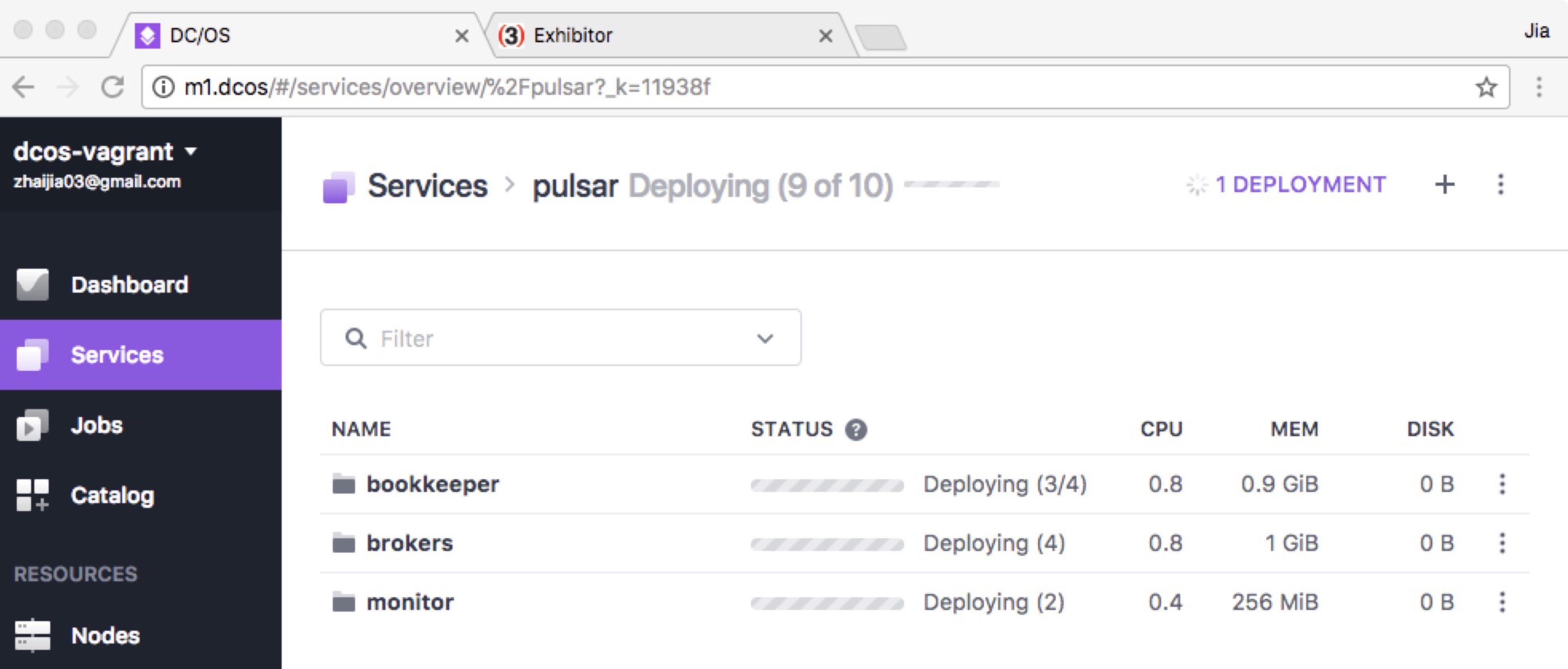

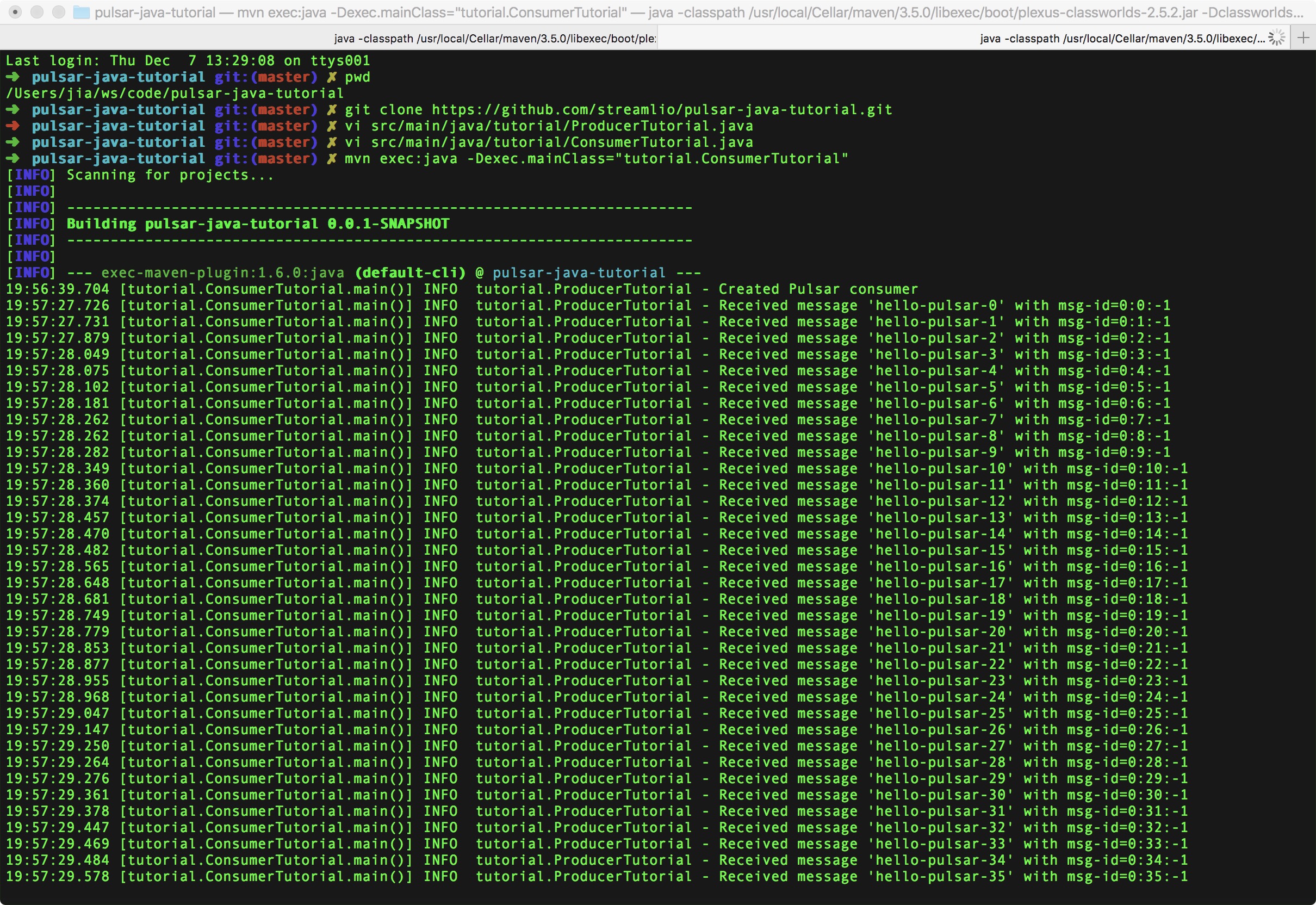

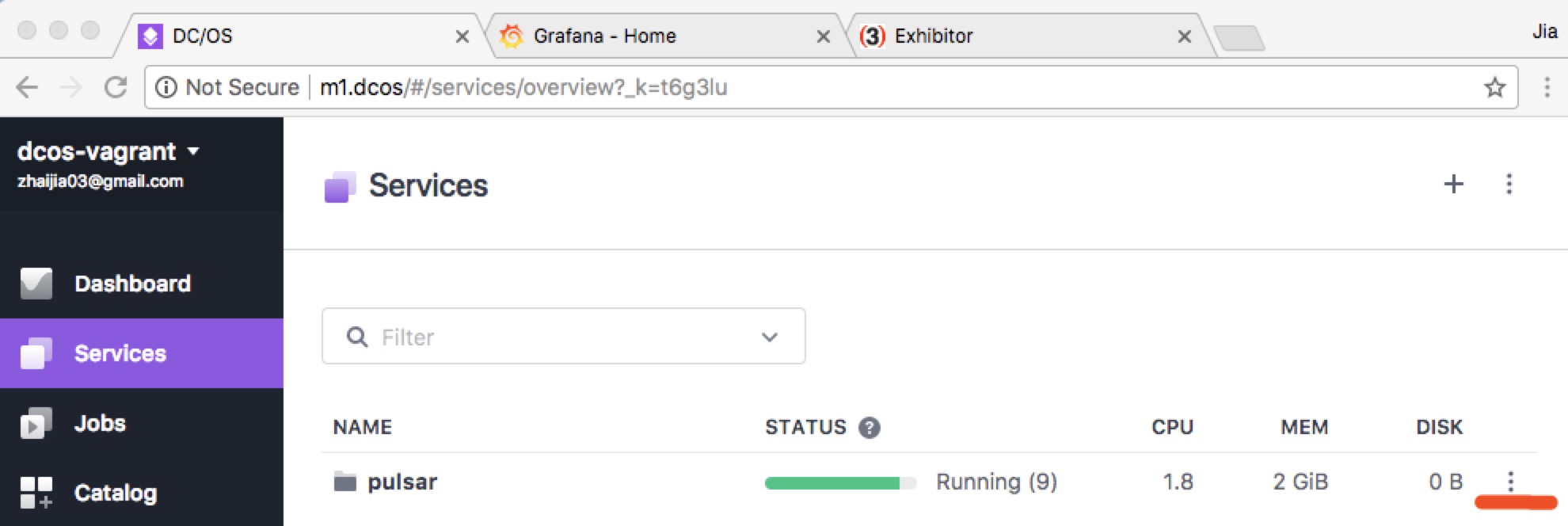

- `site2/docs/deploy-dcos.md` (new file, mode 100644)

- `site2/docs/deploy-kubernetes.md` (new file, mode 100644)

此差异已折叠。

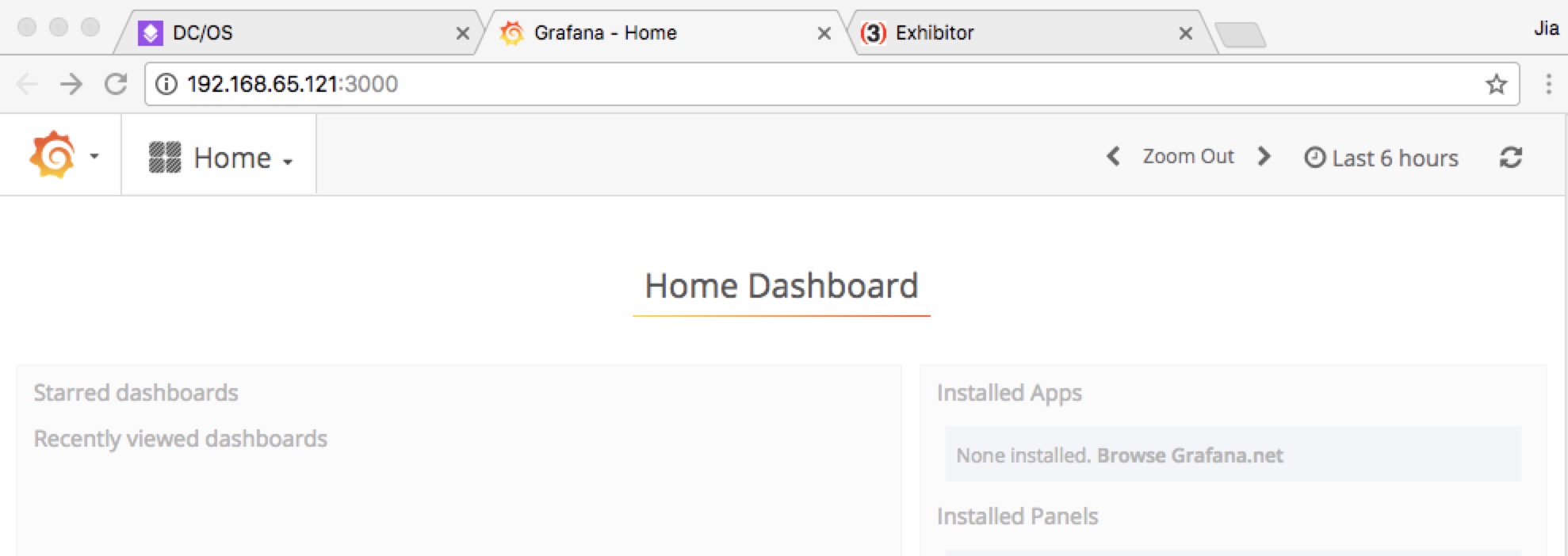

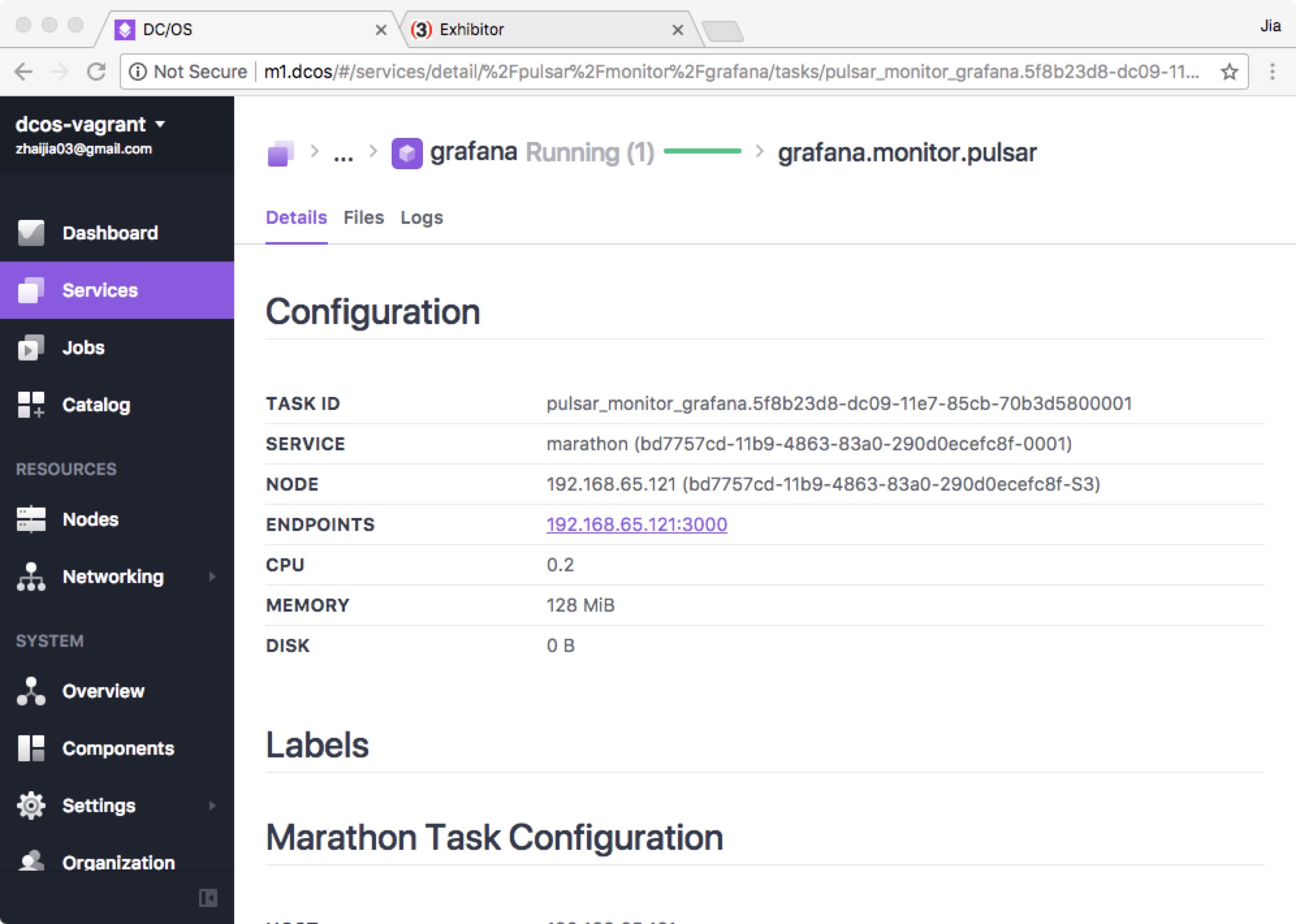

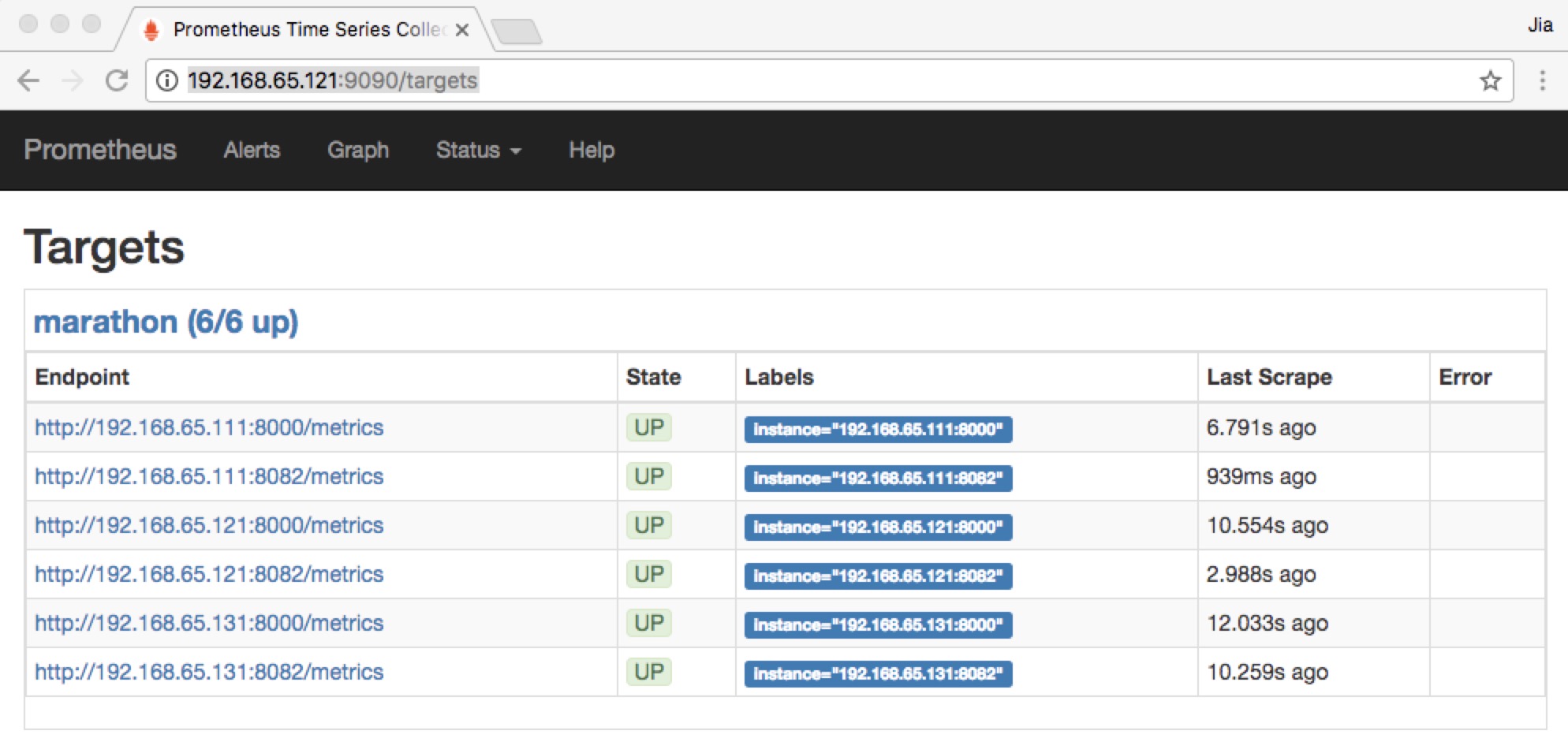

- `site2/docs/deploy-monitoring.md` (new file, mode 100644)

- `site2/docs/developing-codebase.md` (new file, mode 100644)
- `site2/docs/developing-cpp.md` (new file, mode 100644)

- `site2/docs/developing-schema.md` (new file, mode 100644)

- `site2/docs/developing-tools.md` (new file, mode 100644)

- `site2/docs/functions-api.md` (new file, mode 100644)

- `site2/docs/functions-deploying.md` (new file, mode 100644)

- `site2/docs/functions-metrics.md` (new file, mode 100644)

- `site2/docs/functions-overview.md` (new file, mode 100644)

- `site2/docs/io-overview.md` (new file, mode 100644)

- `site2/docs/io-quickstart.md` (new file, mode 100644)

- `site2/docs/reference-auth.md` (new file, mode 100644)

- `site2/docs/reference-cli-tools.md` (new file, mode 100644)

- `site2/docs/reference-rest-api.md` (new file, mode 100644)

- `site2/docs/security-athenz.md` (new file, mode 100644)

- `site2/docs/security-encryption.md` (new file, mode 100644)

- `site2/docs/security-extending.md` (new file, mode 100644)

- `site2/docs/security-overview.md` (new file, mode 100644)

- `site2/docs/security-tls.md` (new file, mode 100644)

- `site2/tools/docker-build-site.sh` (new file, mode 100755)

- `site2/website/core/Footer.js` (new file, mode 100644)

- `site2/website/data/resources.js` (new file, mode 100644)

- `site2/website/data/team.js` (new file, mode 100644)

- `site2/website/package.json` (new file, mode 100644)

- `site2/website/pages/en/contact.js` (new file, mode 100644)

- `site2/website/pages/en/events.js` (new file, mode 100644)

- `site2/website/pages/en/index.js` (new file, mode 100755)

- `site2/website/pages/en/team.js` (new file, mode 100644)

- `site2/website/release-notes.md` (new file, mode 100644)

- `site2/website/releases.json` (new file, mode 100644)

- `site2/website/scripts/replace.js` (new file, mode 100644)

- `site2/website/sidebars.json` (new file, mode 100644)

- `site2/website/siteConfig.js` (new file, mode 100644)

- `site2/website/static/js/custom.js` (new file, mode 100644)
