First commit

README_CN.md

0 → 100644

img2shell.py

0 → 100644

imgs/colorbar.png

0 → 100644

498 bytes

imgs/colorbar_highlight.png

0 → 100644

636 bytes

imgs/kun.gif

0 → 100644

2.4 MB

imgs/logo.png

0 → 100644

39.9 KB

imgs/logo.psd

0 → 100644

File added

imgs/logo_char.png

0 → 100644

8.9 KB

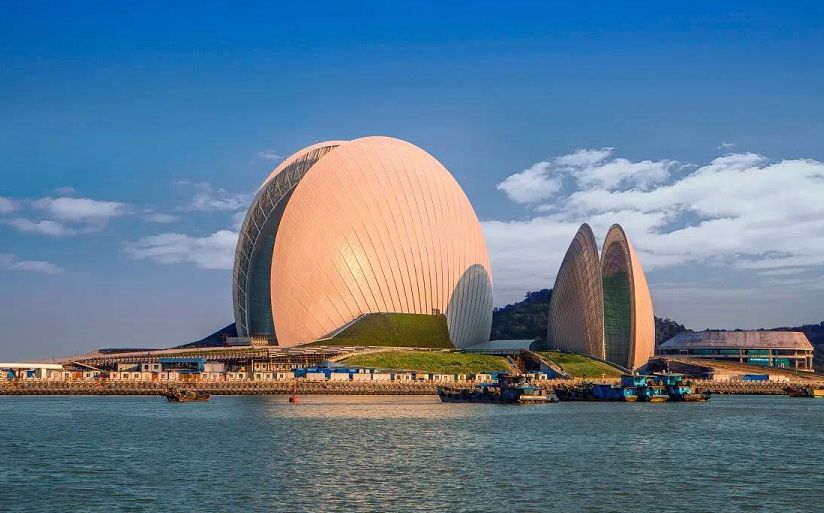

imgs/test.jpg

0 → 100644

71.8 KB

imgs/test_char.png

0 → 100644

19.4 KB

options.py

0 → 100644

play.py

0 → 100644

util/ffmpeg.py

0 → 100644

util/util.py

0 → 100644