refine readme, remove the out-of-date docs

Signed-off-by: NFeynmanZhou <pengfeizhou@yunify.com>

docs/cla.md

Deleted

100644 → 0

docs/en/guides/README.md

Deleted

100644 → 0

docs/en/guides/screenshots.md

Deleted

100644 → 0

docs/images/app-store.png

0 → 100644

549.0 KB

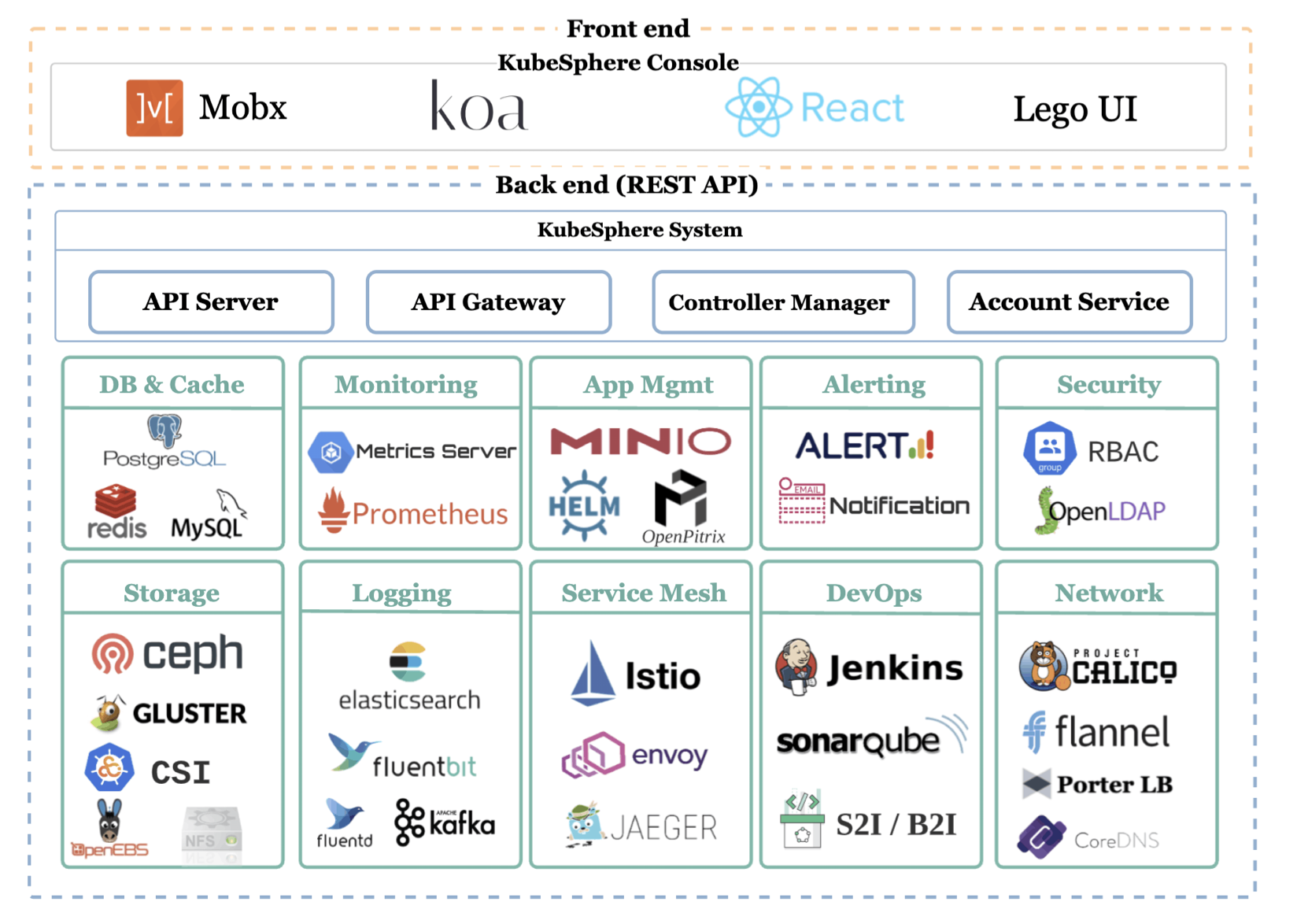

docs/images/architecture.png

0 → 100644

643.3 KB

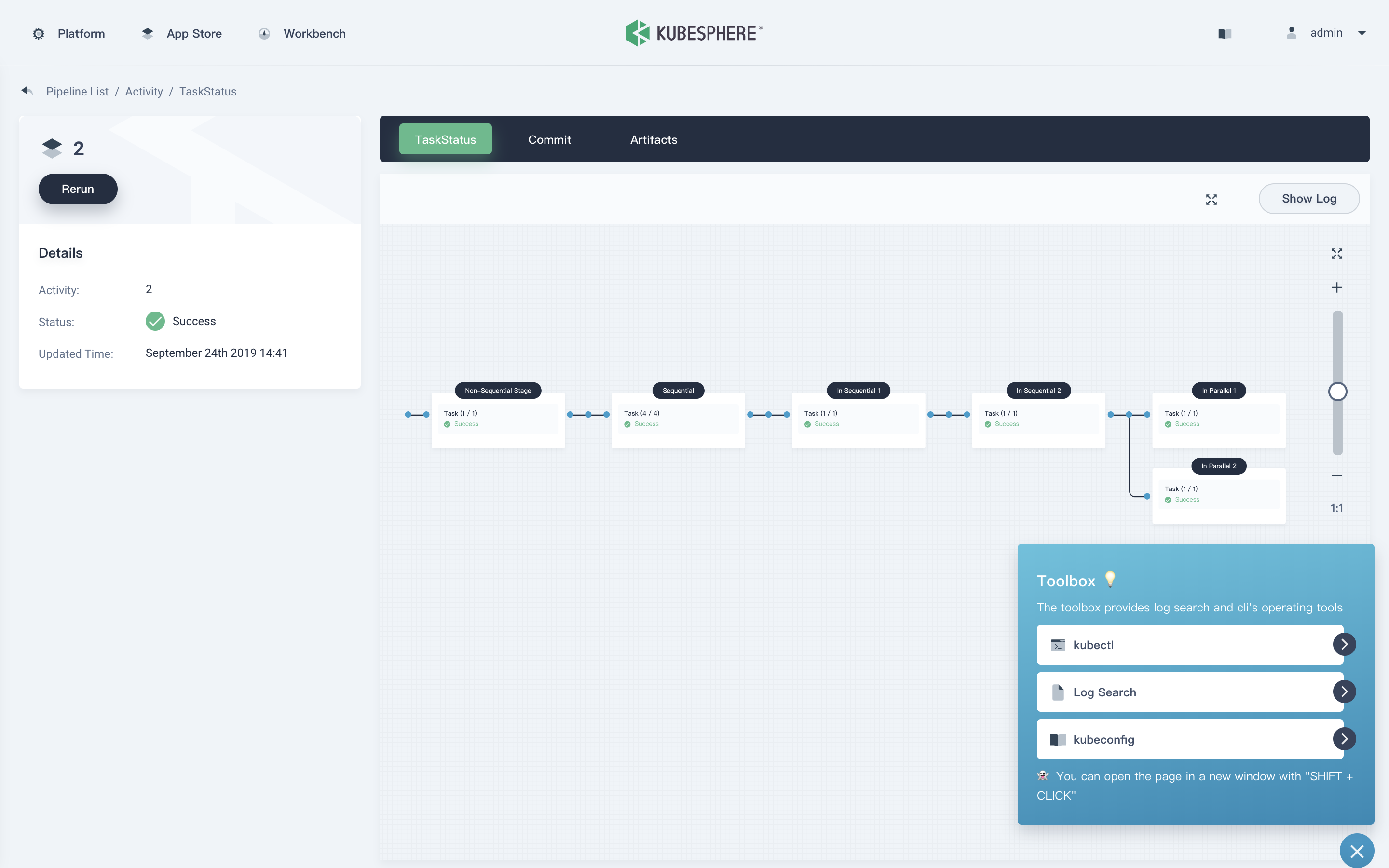

docs/images/cicd.png

0 → 100644

592.5 KB

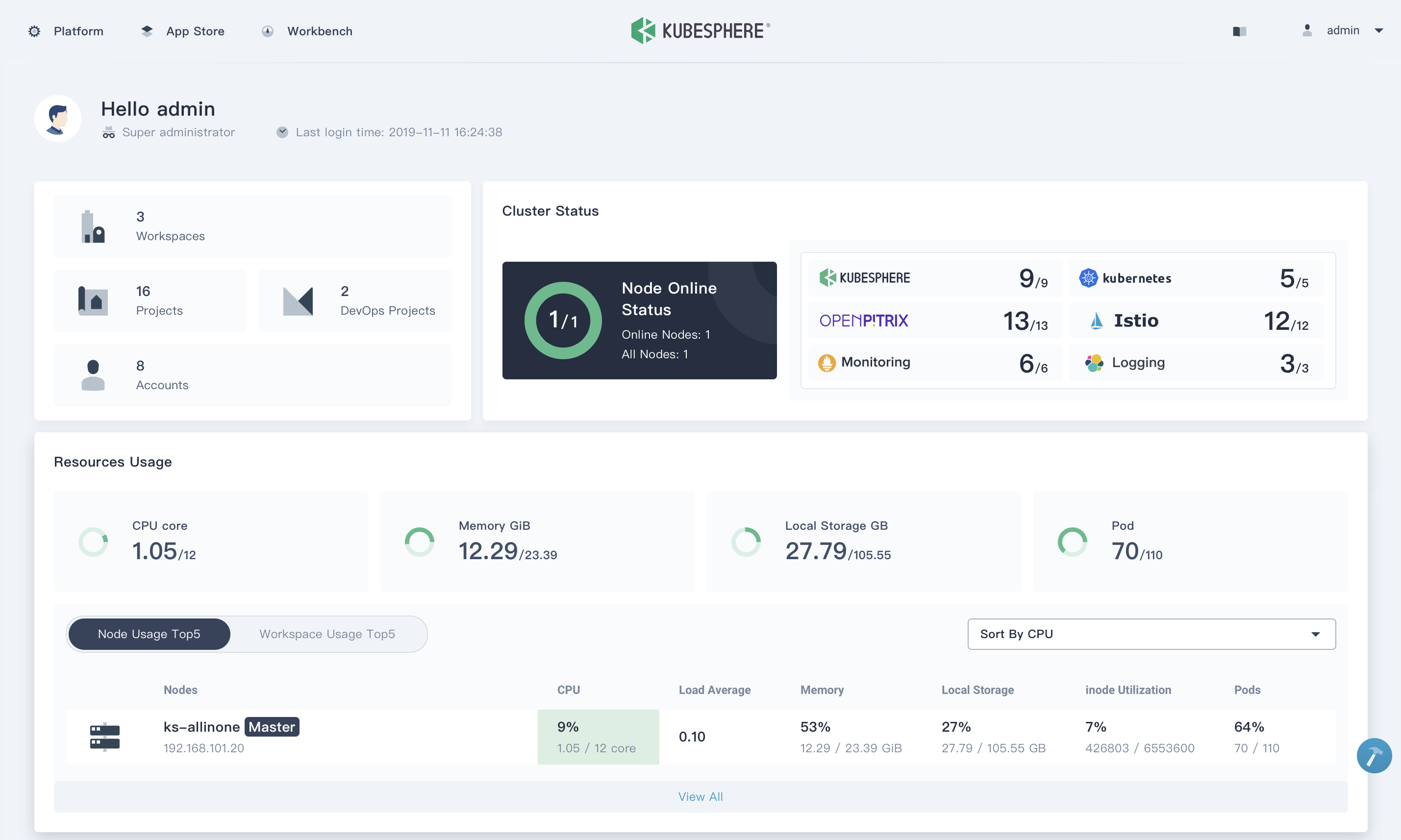

docs/images/console.png

0 → 100644

352.9 KB

515.9 KB

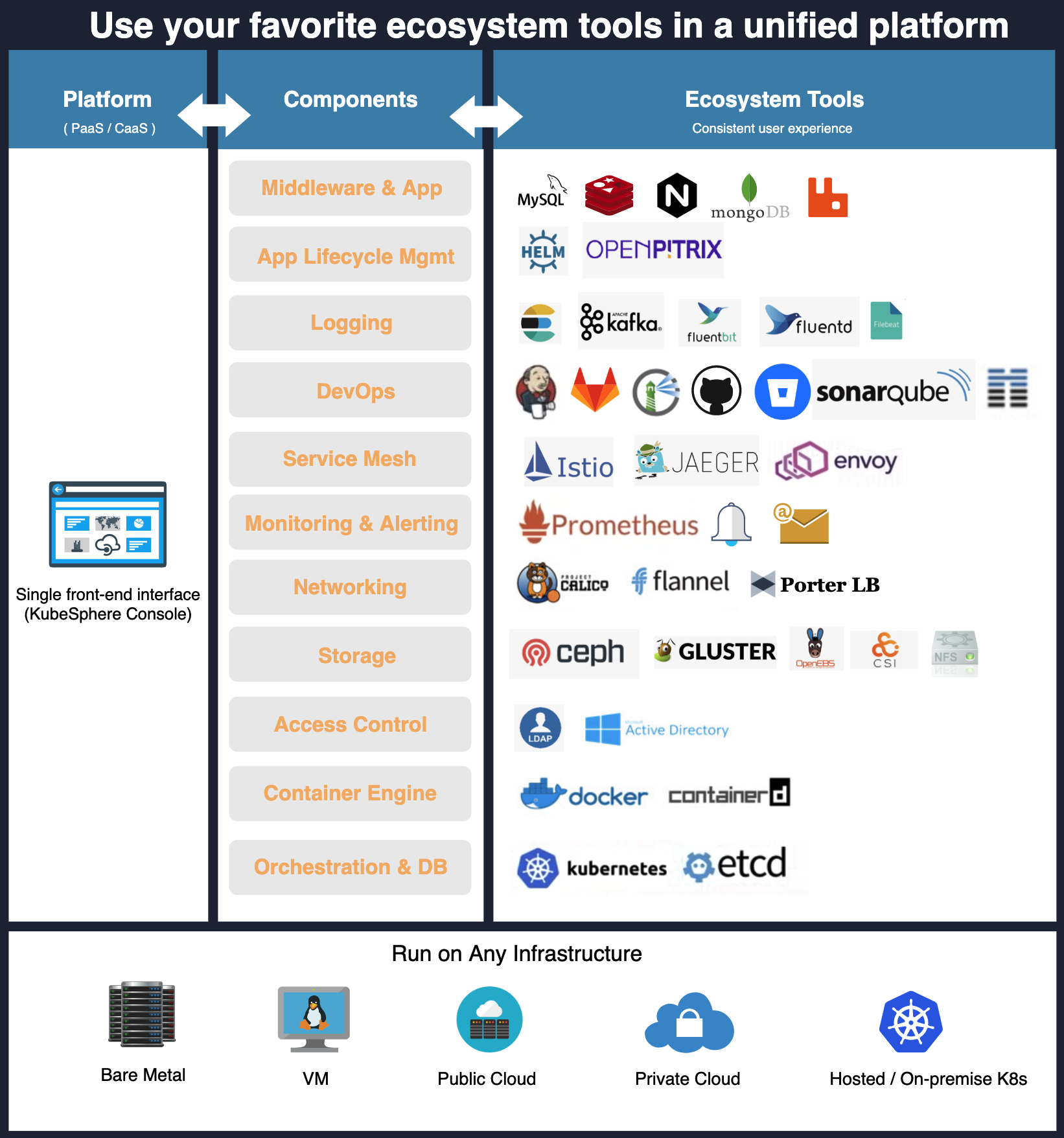

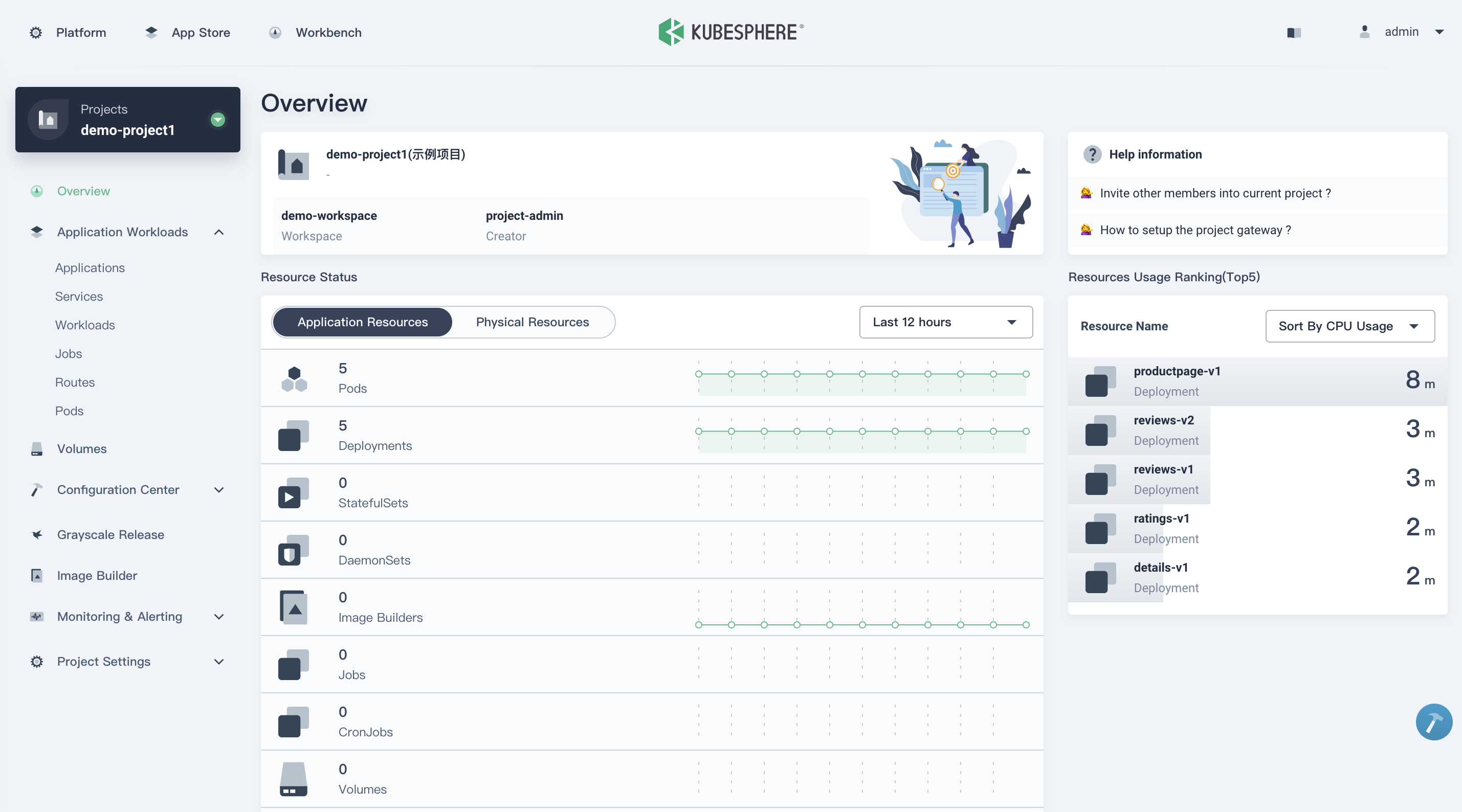

docs/images/project.png

0 → 100644

414.3 KB