Initial commit

.Doxyfile

0 → 100644

.travis.yml

0 → 100644

CMakeLists.txt

0 → 100644

CONTRIBUTING.md

0 → 100644

CONTRIBUTORS.md

0 → 100644

INSTALL.md

0 → 100644

LICENSE

0 → 100644

Makefile

0 → 100644

Makefile.config.example

0 → 100644

README.md

0 → 100644

README_BVLC.md

0 → 100644

caffe.cloc

0 → 100644

cmake/ConfigGen.cmake

0 → 100644

cmake/Cuda.cmake

0 → 100644

cmake/Dependencies.cmake

0 → 100644

cmake/External/gflags.cmake

0 → 100644

cmake/External/glog.cmake

0 → 100644

cmake/Misc.cmake

0 → 100644

cmake/Modules/FindAtlas.cmake

0 → 100644

cmake/Modules/FindGFlags.cmake

0 → 100644

cmake/Modules/FindGlog.cmake

0 → 100644

cmake/Modules/FindLAPACK.cmake

0 → 100644

cmake/Modules/FindLMDB.cmake

0 → 100644

cmake/Modules/FindLevelDB.cmake

0 → 100644

cmake/Modules/FindMKL.cmake

0 → 100644

cmake/Modules/FindMatlabMex.cmake

0 → 100644

cmake/Modules/FindNCCL.cmake

0 → 100644

cmake/Modules/FindNumPy.cmake

0 → 100644

cmake/Modules/FindOpenBLAS.cmake

0 → 100644

cmake/Modules/FindSnappy.cmake

0 → 100644

cmake/Modules/FindvecLib.cmake

0 → 100644

cmake/ProtoBuf.cmake

0 → 100644

cmake/Summary.cmake

0 → 100644

cmake/Targets.cmake

0 → 100644

cmake/Templates/caffe_config.h.in

0 → 100644

cmake/Uninstall.cmake.in

0 → 100644

cmake/Utils.cmake

0 → 100644

cmake/lint.cmake

0 → 100644

devtools/pylint.rc

0 → 100644

docker/README.md

0 → 100644

docker/cpu/Dockerfile

0 → 100644

docker/gpu/Dockerfile

0 → 100644

docs/CMakeLists.txt

0 → 100644

docs/CNAME

0 → 100644

docs/README.md

0 → 100644

docs/_config.yml

0 → 100644

docs/_layouts/default.html

0 → 100644

docs/development.md

0 → 100644

docs/images/GitHub-Mark-64px.png

0 → 100644

2.6 KB

docs/images/caffeine-icon.png

0 → 100644

954 bytes

docs/index.md

0 → 100644

docs/install_apt.md

0 → 100644

docs/install_apt_debian.md

0 → 100644

docs/install_osx.md

0 → 100644

docs/install_yum.md

0 → 100644

docs/installation.md

0 → 100644

docs/model_zoo.md

0 → 100644

docs/multigpu.md

0 → 100644

docs/stylesheets/pygment_trac.css

0 → 100644

docs/stylesheets/reset.css

0 → 100644

docs/stylesheets/styles.css

0 → 100644

docs/tutorial/convolution.md

0 → 100644

docs/tutorial/data.md

0 → 100644

docs/tutorial/fig/.gitignore

0 → 100644

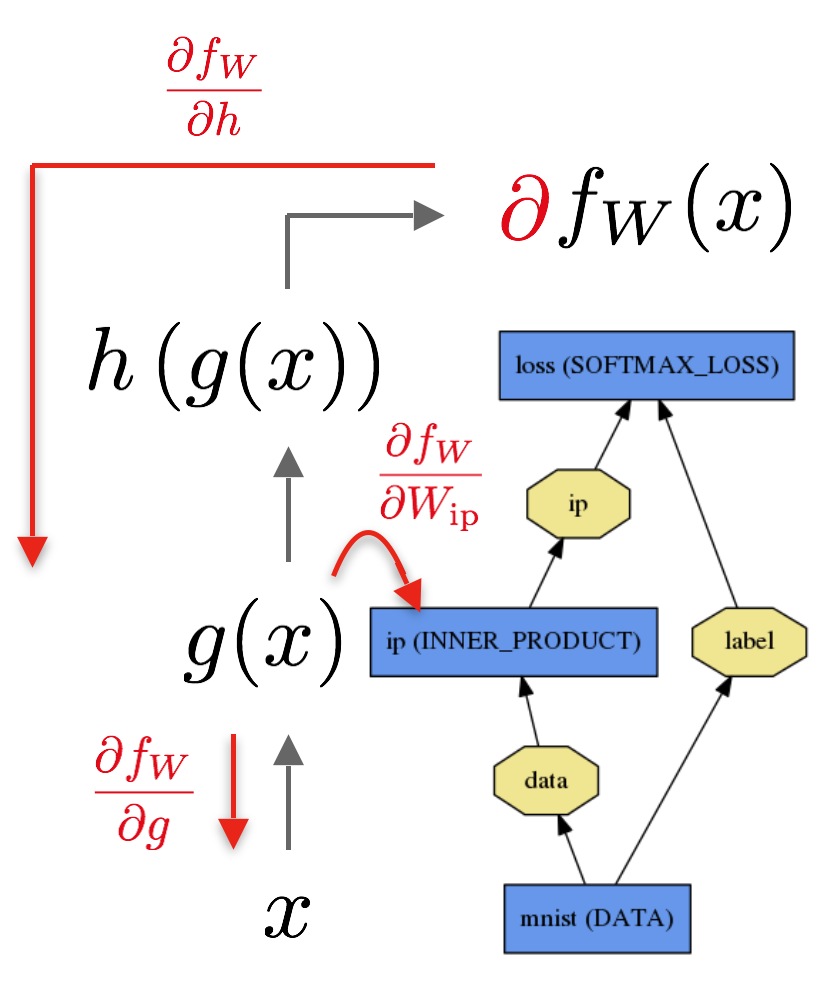

docs/tutorial/fig/backward.jpg

0 → 100644

102.6 KB

docs/tutorial/fig/forward.jpg

0 → 100644

docs/tutorial/fig/layer.jpg

0 → 100644

docs/tutorial/fig/logreg.jpg

0 → 100644

docs/tutorial/forward_backward.md

0 → 100644

docs/tutorial/index.md

0 → 100644

docs/tutorial/interfaces.md

0 → 100644

docs/tutorial/layers.md

0 → 100644

docs/tutorial/layers/absval.md

0 → 100644

docs/tutorial/layers/accuracy.md

0 → 100644

docs/tutorial/layers/argmax.md

0 → 100644

docs/tutorial/layers/batchnorm.md

0 → 100644

docs/tutorial/layers/bias.md

0 → 100644

docs/tutorial/layers/bnll.md

0 → 100644

docs/tutorial/layers/concat.md

0 → 100644

docs/tutorial/layers/crop.md

0 → 100644

docs/tutorial/layers/data.md

0 → 100644

docs/tutorial/layers/dropout.md

0 → 100644

docs/tutorial/layers/dummydata.md

0 → 100644

docs/tutorial/layers/eltwise.md

0 → 100644

docs/tutorial/layers/elu.md

0 → 100644

docs/tutorial/layers/embed.md

0 → 100644

docs/tutorial/layers/exp.md

0 → 100644

docs/tutorial/layers/filter.md

0 → 100644

docs/tutorial/layers/flatten.md

0 → 100644

docs/tutorial/layers/hdf5data.md

0 → 100644

docs/tutorial/layers/hingeloss.md

0 → 100644

docs/tutorial/layers/im2col.md

0 → 100644

docs/tutorial/layers/imagedata.md

0 → 100644

docs/tutorial/layers/input.md

0 → 100644

docs/tutorial/layers/log.md

0 → 100644

docs/tutorial/layers/lrn.md

0 → 100644

docs/tutorial/layers/lstm.md

0 → 100644

docs/tutorial/layers/mvn.md

0 → 100644

docs/tutorial/layers/parameter.md

0 → 100644

docs/tutorial/layers/pooling.md

0 → 100644

docs/tutorial/layers/power.md

0 → 100644

docs/tutorial/layers/prelu.md

0 → 100644

docs/tutorial/layers/python.md

0 → 100644

docs/tutorial/layers/recurrent.md

0 → 100644

docs/tutorial/layers/reduction.md

0 → 100644

docs/tutorial/layers/relu.md

0 → 100644

docs/tutorial/layers/reshape.md

0 → 100644

docs/tutorial/layers/rnn.md

0 → 100644

docs/tutorial/layers/scale.md

0 → 100644

docs/tutorial/layers/sigmoid.md

0 → 100644

docs/tutorial/layers/silence.md

0 → 100644

docs/tutorial/layers/slice.md

0 → 100644

docs/tutorial/layers/softmax.md

0 → 100644

docs/tutorial/layers/split.md

0 → 100644

docs/tutorial/layers/spp.md

0 → 100644

docs/tutorial/layers/tanh.md

0 → 100644

docs/tutorial/layers/threshold.md

0 → 100644

docs/tutorial/layers/tile.md

0 → 100644

docs/tutorial/loss.md

0 → 100644

docs/tutorial/net_layer_blob.md

0 → 100644

docs/tutorial/solver.md

0 → 100644

examples/00-classification.ipynb

0 → 100644

examples/01-learning-lenet.ipynb

0 → 100644

examples/02-fine-tuning.ipynb

0 → 100644

examples/CMakeLists.txt

0 → 100644

examples/brewing-logreg.ipynb

0 → 100644

examples/cifar10/readme.md

0 → 100644

examples/cifar10/train_full.sh

0 → 100644

examples/cifar10/train_quick.sh

0 → 100644

examples/convert_model.ipynb

0 → 100644

examples/detection.ipynb

0 → 100644

examples/imagenet/readme.md

0 → 100644

examples/images/cat gray.jpg

0 → 100644

examples/images/cat.jpg

0 → 100644

examples/images/cat_gray.jpg

0 → 100644

examples/images/cropped_panda.jpg

0 → 100644

examples/images/fish-bike.jpg

0 → 100644

examples/inceptionv3.ipynb

0 → 100644

examples/mnist/create_mnist.sh

0 → 100644

examples/mnist/lenet.prototxt

0 → 100644

examples/mnist/readme.md

0 → 100644

examples/mnist/train_lenet.sh

0 → 100644

examples/net_surgery.ipynb

0 → 100644

examples/pycaffe/caffenet.py

0 → 100644

examples/pycaffe/layers/pyloss.py

0 → 100644

examples/pycaffe/linreg.prototxt

0 → 100644

examples/pycaffe/tools.py

0 → 100644

examples/siamese/readme.md

0 → 100644

examples/ssd.ipynb

0 → 100644

examples/ssd/plot_detections.py

0 → 100644

examples/ssd/score_ssd_coco.py

0 → 100644

examples/ssd/score_ssd_pascal.py

0 → 100644

examples/ssd/ssd_coco.py

0 → 100644

examples/ssd/ssd_detect.cpp

0 → 100644

examples/ssd/ssd_ilsvrc.py

0 → 100644

examples/ssd/ssd_pascal.py

0 → 100644

examples/ssd/ssd_pascal_orig.py

0 → 100644

examples/ssd/ssd_pascal_resnet.py

0 → 100644

examples/ssd/ssd_pascal_speed.py

0 → 100644

examples/ssd/ssd_pascal_video.py

0 → 100644

examples/ssd/ssd_pascal_webcam.py

0 → 100644

examples/ssd/ssd_pascal_zf.py

0 → 100644

examples/ssd_detect.ipynb

0 → 100644

examples/web_demo/app.py

0 → 100644

examples/web_demo/exifutil.py

0 → 100644

examples/web_demo/readme.md

0 → 100644

include/caffe/blob.hpp

0 → 100644

include/caffe/caffe.hpp

0 → 100644

include/caffe/common.hpp

0 → 100644

include/caffe/filler.hpp

0 → 100644

include/caffe/internal_thread.hpp

0 → 100644

include/caffe/layer.hpp

0 → 100644

include/caffe/layer_factory.hpp

0 → 100644

include/caffe/net.hpp

0 → 100644

include/caffe/parallel.hpp

0 → 100644

include/caffe/sgd_solvers.hpp

0 → 100644

include/caffe/solver.hpp

0 → 100644

include/caffe/solver_factory.hpp

0 → 100644

include/caffe/syncedmem.hpp

0 → 100644

include/caffe/util/bbox_util.hpp

0 → 100644

include/caffe/util/benchmark.hpp

0 → 100644

include/caffe/util/cudnn.hpp

0 → 100644

include/caffe/util/db.hpp

0 → 100644

include/caffe/util/db_leveldb.hpp

0 → 100644

include/caffe/util/db_lmdb.hpp

0 → 100644

include/caffe/util/format.hpp

0 → 100644

include/caffe/util/gpu_util.cuh

0 → 100644

include/caffe/util/hdf5.hpp

0 → 100644

include/caffe/util/im2col.hpp

0 → 100644

include/caffe/util/io.hpp

0 → 100644

include/caffe/util/nccl.hpp

0 → 100644

include/caffe/util/rng.hpp

0 → 100644

include/caffe/util/sampler.hpp

0 → 100644

matlab/+caffe/+test/test_io.m

0 → 100644

matlab/+caffe/+test/test_net.m

0 → 100644

matlab/+caffe/+test/test_solver.m

0 → 100644

matlab/+caffe/Blob.m

0 → 100644

matlab/+caffe/Layer.m

0 → 100644

matlab/+caffe/Net.m

0 → 100644

matlab/+caffe/Solver.m

0 → 100644

matlab/+caffe/get_net.m

0 → 100644

matlab/+caffe/get_solver.m

0 → 100644

matlab/+caffe/io.m

0 → 100644

matlab/+caffe/private/CHECK.m

0 → 100644

matlab/+caffe/private/caffe_.cpp

0 → 100644

matlab/+caffe/reset_all.m

0 → 100644

matlab/+caffe/run_tests.m

0 → 100644

matlab/+caffe/set_device.m

0 → 100644

matlab/+caffe/set_mode_cpu.m

0 → 100644

matlab/+caffe/set_mode_gpu.m

0 → 100644

matlab/+caffe/version.m

0 → 100644

matlab/CMakeLists.txt

0 → 100644

matlab/demo/classification_demo.m

0 → 100644

matlab/hdf5creation/.gitignore

0 → 100644

matlab/hdf5creation/demo.m

0 → 100644

matlab/hdf5creation/store2hdf5.m

0 → 100644

python/CMakeLists.txt

0 → 100644

python/action_metrics.py

0 → 100644

python/caffe/__init__.py

0 → 100644

python/caffe/_caffe.cpp

0 → 100644

python/caffe/classifier.py

0 → 100644

python/caffe/coord_map.py

0 → 100644

python/caffe/detector.py

0 → 100644

python/caffe/draw.py

0 → 100644

python/caffe/io.py

0 → 100644

python/caffe/net_spec.py

0 → 100644

python/caffe/pycaffe.py

0 → 100644

python/caffe/test/test_io.py

0 → 100644

python/caffe/test/test_nccl.py

0 → 100644

python/caffe/test/test_net.py

0 → 100644

python/caffe/test/test_solver.py

0 → 100644

python/classify.py

0 → 100644

python/detect.py

0 → 100644

python/draw_net.py

0 → 100644

python/rename_layers.py

0 → 100644

python/requirements.txt

0 → 100644

python/train.py

0 → 100644

scripts/build_docs.sh

0 → 100644

scripts/caffe

0 → 100644

scripts/copy_notebook.py

0 → 100644

scripts/cpp_lint.py

0 → 100644

scripts/deploy_docs.sh

0 → 100644

scripts/download_model_binary.py

0 → 100644

scripts/gather_examples.sh

0 → 100644

scripts/split_caffe_proto.py

0 → 100644

scripts/travis/build.sh

0 → 100644

scripts/travis/configure-cmake.sh

0 → 100644

scripts/travis/configure-make.sh

0 → 100644

scripts/travis/configure.sh

0 → 100644

scripts/travis/defaults.sh

0 → 100644

scripts/travis/install-deps.sh

0 → 100644

scripts/travis/setup-venv.sh

0 → 100644

scripts/travis/test.sh

0 → 100644

scripts/upload_model_to_gist.sh

0 → 100644

src/caffe/CMakeLists.txt

0 → 100644

src/caffe/blob.cpp

0 → 100644

src/caffe/common.cpp

0 → 100644

src/caffe/data_transformer.cpp

0 → 100644

src/caffe/internal_thread.cpp

0 → 100644

src/caffe/layer.cpp

0 → 100644

src/caffe/layer_factory.cpp

0 → 100644

src/caffe/layers/absval_layer.cpp

0 → 100644

src/caffe/layers/absval_layer.cu

0 → 100644

src/caffe/layers/argmax_layer.cpp

0 → 100644

src/caffe/layers/bias_layer.cpp

0 → 100644

src/caffe/layers/bias_layer.cu

0 → 100644

src/caffe/layers/bnll_layer.cpp

0 → 100644

src/caffe/layers/bnll_layer.cu

0 → 100644

src/caffe/layers/concat_layer.cpp

0 → 100644

src/caffe/layers/concat_layer.cu

0 → 100644

src/caffe/layers/conv_dw_layer.cu

0 → 100644

src/caffe/layers/conv_layer.cpp

0 → 100644

src/caffe/layers/conv_layer.cu

0 → 100644

src/caffe/layers/crop_layer.cpp

0 → 100644

src/caffe/layers/crop_layer.cu

0 → 100644

src/caffe/layers/data_layer.cpp

0 → 100644

src/caffe/layers/deconv_layer.cpp

0 → 100644

src/caffe/layers/deconv_layer.cu

0 → 100644

src/caffe/layers/dropout_layer.cu

0 → 100644

src/caffe/layers/eltwise_layer.cu

0 → 100644

src/caffe/layers/elu_layer.cpp

0 → 100644

src/caffe/layers/elu_layer.cu

0 → 100644

src/caffe/layers/embed_layer.cpp

0 → 100644

src/caffe/layers/embed_layer.cu

0 → 100644

src/caffe/layers/exp_layer.cpp

0 → 100644

src/caffe/layers/exp_layer.cu

0 → 100644

src/caffe/layers/filter_layer.cpp

0 → 100644

src/caffe/layers/filter_layer.cu

0 → 100644

src/caffe/layers/grn_layer.cpp

0 → 100644

src/caffe/layers/grn_layer.cu

0 → 100644

src/caffe/layers/im2col_layer.cpp

0 → 100644

src/caffe/layers/im2col_layer.cu

0 → 100644

src/caffe/layers/input_layer.cpp

0 → 100644

src/caffe/layers/log_layer.cpp

0 → 100644

src/caffe/layers/log_layer.cu

0 → 100644

src/caffe/layers/loss_layer.cpp

0 → 100644

src/caffe/layers/lrn_layer.cpp

0 → 100644

src/caffe/layers/lrn_layer.cu

0 → 100644

src/caffe/layers/lstm_layer.cpp

0 → 100644

src/caffe/layers/mvn_layer.cpp

0 → 100644

src/caffe/layers/mvn_layer.cu

0 → 100644

src/caffe/layers/neuron_layer.cpp

0 → 100644

src/caffe/layers/permute_layer.cu

0 → 100644

src/caffe/layers/pooling_layer.cu

0 → 100644

src/caffe/layers/power_layer.cpp

0 → 100644

src/caffe/layers/power_layer.cu

0 → 100644

src/caffe/layers/prelu_layer.cpp

0 → 100644

src/caffe/layers/prelu_layer.cu

0 → 100644

src/caffe/layers/relu_layer.cpp

0 → 100644

src/caffe/layers/relu_layer.cu

0 → 100644

src/caffe/layers/rnn_layer.cpp

0 → 100644

src/caffe/layers/scale_layer.cpp

0 → 100644

src/caffe/layers/scale_layer.cu

0 → 100644

src/caffe/layers/sigmoid_layer.cu

0 → 100644

src/caffe/layers/silence_layer.cu

0 → 100644

src/caffe/layers/slice_layer.cpp

0 → 100644

src/caffe/layers/slice_layer.cu

0 → 100644

src/caffe/layers/softmax_layer.cu

0 → 100644

src/caffe/layers/split_layer.cpp

0 → 100644

src/caffe/layers/split_layer.cu

0 → 100644

src/caffe/layers/spp_layer.cpp

0 → 100644

src/caffe/layers/tanh_layer.cpp

0 → 100644

src/caffe/layers/tanh_layer.cu

0 → 100644

src/caffe/layers/tile_layer.cpp

0 → 100644

src/caffe/layers/tile_layer.cu

0 → 100644

src/caffe/net.cpp

0 → 100644

src/caffe/parallel.cpp

0 → 100644

src/caffe/proto/caffe.proto

0 → 100644

src/caffe/solver.cpp

0 → 100644

src/caffe/solvers/adam_solver.cpp

0 → 100644

src/caffe/solvers/adam_solver.cu

0 → 100644

src/caffe/solvers/sgd_solver.cpp

0 → 100644

src/caffe/solvers/sgd_solver.cu

0 → 100644

src/caffe/syncedmem.cpp

0 → 100644

src/caffe/test/CMakeLists.txt

0 → 100644

src/caffe/test/test_bbox_util.cpp

0 → 100644

src/caffe/test/test_benchmark.cpp

0 → 100644

src/caffe/test/test_blob.cpp

0 → 100644

src/caffe/test/test_common.cpp

0 → 100644

src/caffe/test/test_db.cpp

0 → 100644

src/caffe/test/test_filler.cpp

0 → 100644

src/caffe/test/test_grn_layer.cpp

0 → 100644

src/caffe/test/test_io.cpp

0 → 100644

src/caffe/test/test_lrn_layer.cpp

0 → 100644

src/caffe/test/test_mvn_layer.cpp

0 → 100644

src/caffe/test/test_net.cpp

0 → 100644

src/caffe/test/test_platform.cpp

0 → 100644

src/caffe/test/test_protobuf.cpp

0 → 100644

src/caffe/test/test_rnn_layer.cpp

0 → 100644

src/caffe/test/test_solver.cpp

0 → 100644

src/caffe/test/test_spp_layer.cpp

0 → 100644

src/caffe/test/test_syncedmem.cpp

0 → 100644

src/caffe/test/test_util_blas.cpp

0 → 100644

src/caffe/util/bbox_util.cpp

0 → 100644

src/caffe/util/bbox_util.cu

0 → 100644

src/caffe/util/benchmark.cpp

0 → 100644

src/caffe/util/blocking_queue.cpp

0 → 100644

src/caffe/util/cudnn.cpp

0 → 100644

src/caffe/util/db.cpp

0 → 100644

src/caffe/util/db_leveldb.cpp

0 → 100644

src/caffe/util/db_lmdb.cpp

0 → 100644

src/caffe/util/hdf5.cpp

0 → 100644

src/caffe/util/im2col.cpp

0 → 100644

src/caffe/util/im2col.cu

0 → 100644

src/caffe/util/im_transforms.cpp

0 → 100644

src/caffe/util/insert_splits.cpp

0 → 100644

src/caffe/util/io.cpp

0 → 100644

src/caffe/util/math_functions.cpp

0 → 100644

src/caffe/util/math_functions.cu

0 → 100644

src/caffe/util/sampler.cpp

0 → 100644

src/caffe/util/signal_handler.cpp

0 → 100644

src/caffe/util/upgrade_proto.cpp

0 → 100644

src/gtest/CMakeLists.txt

0 → 100644

src/gtest/gtest-all.cpp

0 → 100644

src/gtest/gtest.h

0 → 100644

src/gtest/gtest_main.cc

0 → 100644

tools/CMakeLists.txt

0 → 100644

tools/caffe.cpp

0 → 100644

tools/compute_image_mean.cpp

0 → 100644

tools/convert_imageset.cpp

0 → 100644

tools/device_query.cpp

0 → 100644

tools/extra/extract_seconds.py

0 → 100644

tools/extra/parse_log.py

0 → 100644

tools/extra/parse_log.sh

0 → 100644

tools/extra/summarize.py

0 → 100644

tools/extract_features.cpp

0 → 100644

tools/finetune_net.cpp

0 → 100644

tools/net_speed_benchmark.cpp

0 → 100644

tools/test_net.cpp

0 → 100644

tools/train_net.cpp

0 → 100644

tools/upgrade_net_proto_text.cpp

0 → 100644
