1.add compare result, 2.add seed for paddlegan (#493)

* 1.add compare result, 2.add seed for paddlegan

test_tipc/compare_results.py

0 → 100644

test_tipc/docs/compare_right.png

0 → 100644

50.9 KB

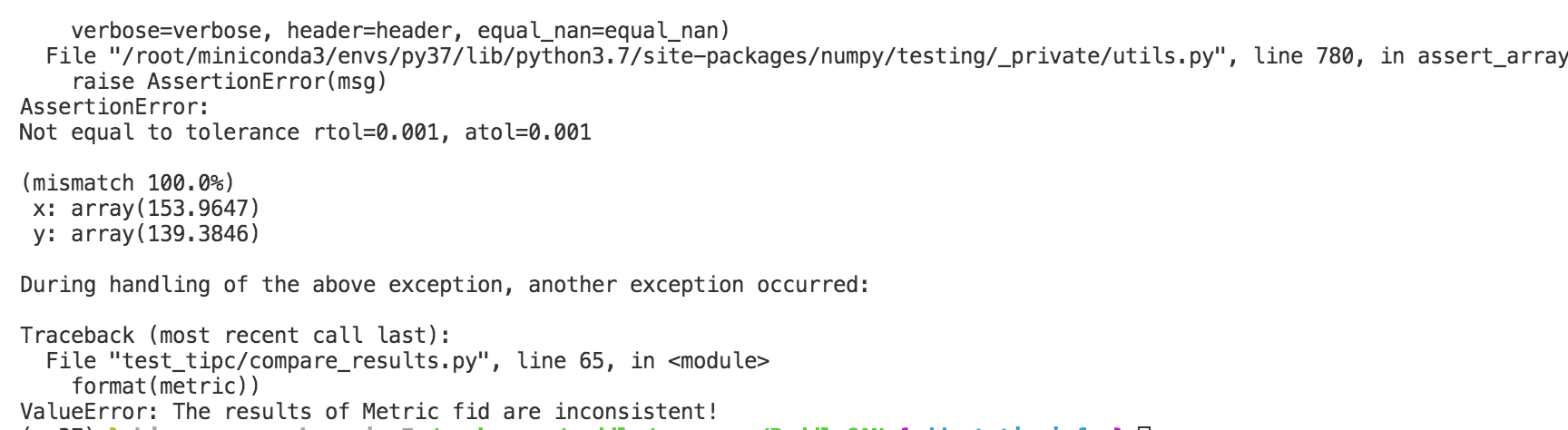

test_tipc/docs/compare_wrong.png

0 → 100644

93.8 KB