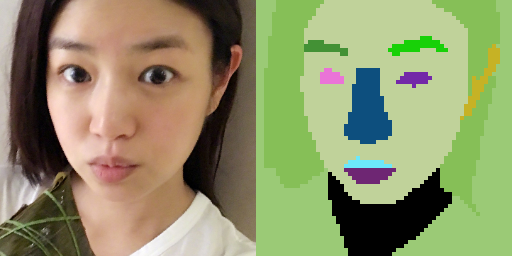

add face_parse predictor in apps (#78)

* add face_parse predictor in apps

Showing

applications/tools/face_parse.py

0 → 100644

docs/imgs/face.png

0 → 100644

200.4 KB

docs/imgs/face_parse_out.png

0 → 100644

122.6 KB