add pix2pix and cyclegan docs (#45)

* add docs
* update


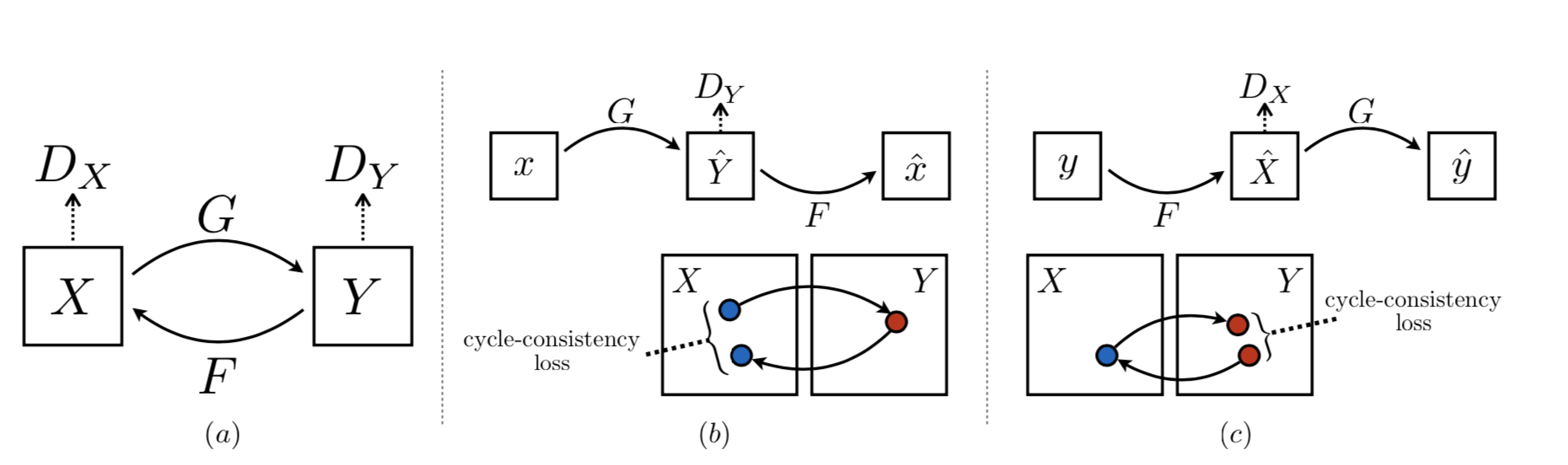

docs/imgs/cyclegan.png (new file, mode 0 → 100644, 138.7 KB)

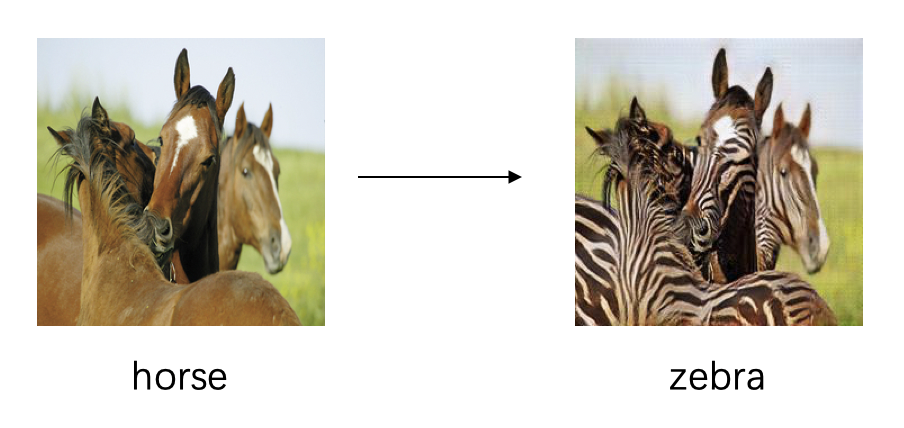

docs/imgs/horse2zebra.png (new file, mode 0 → 100644, 415.4 KB)

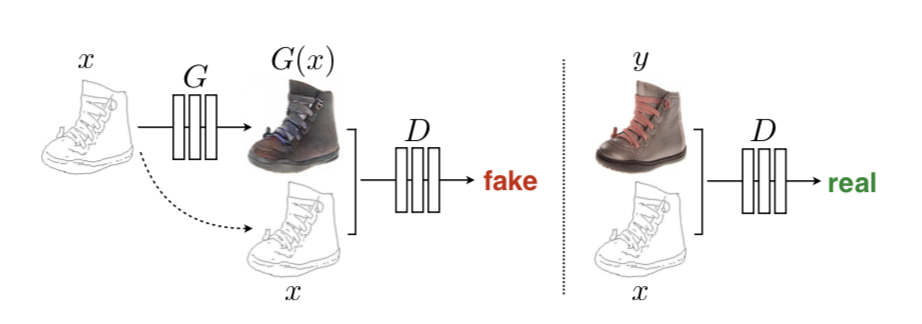

docs/imgs/pix2pix.png (new file, mode 0 → 100644, 89.6 KB)