Switch TensorBoard to VisualDL 2.0 (#242)

* add_vdl
* Update docs for VisualDL.

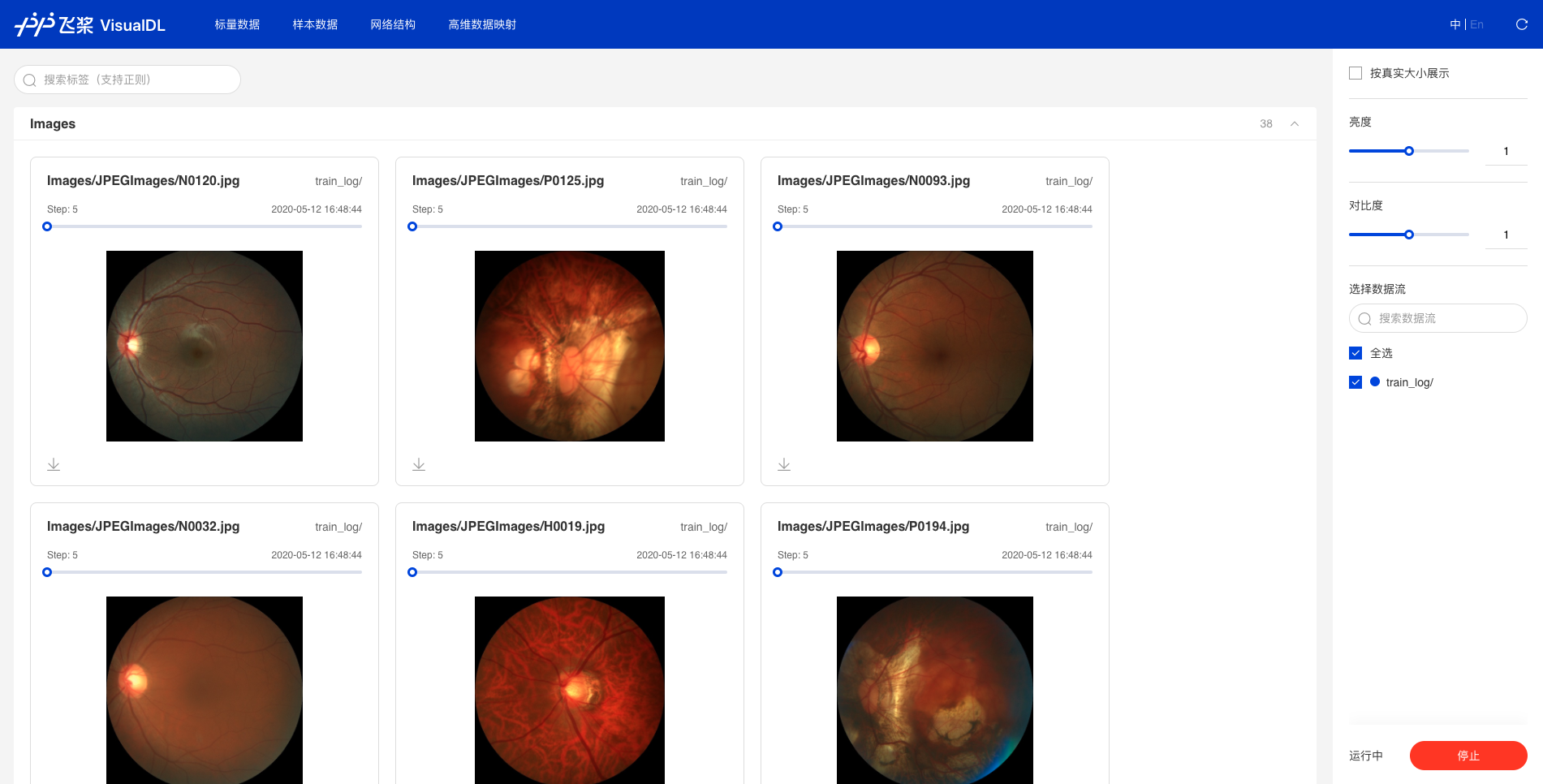

docs/imgs/visualdl_image.png (new file, mode 100644)

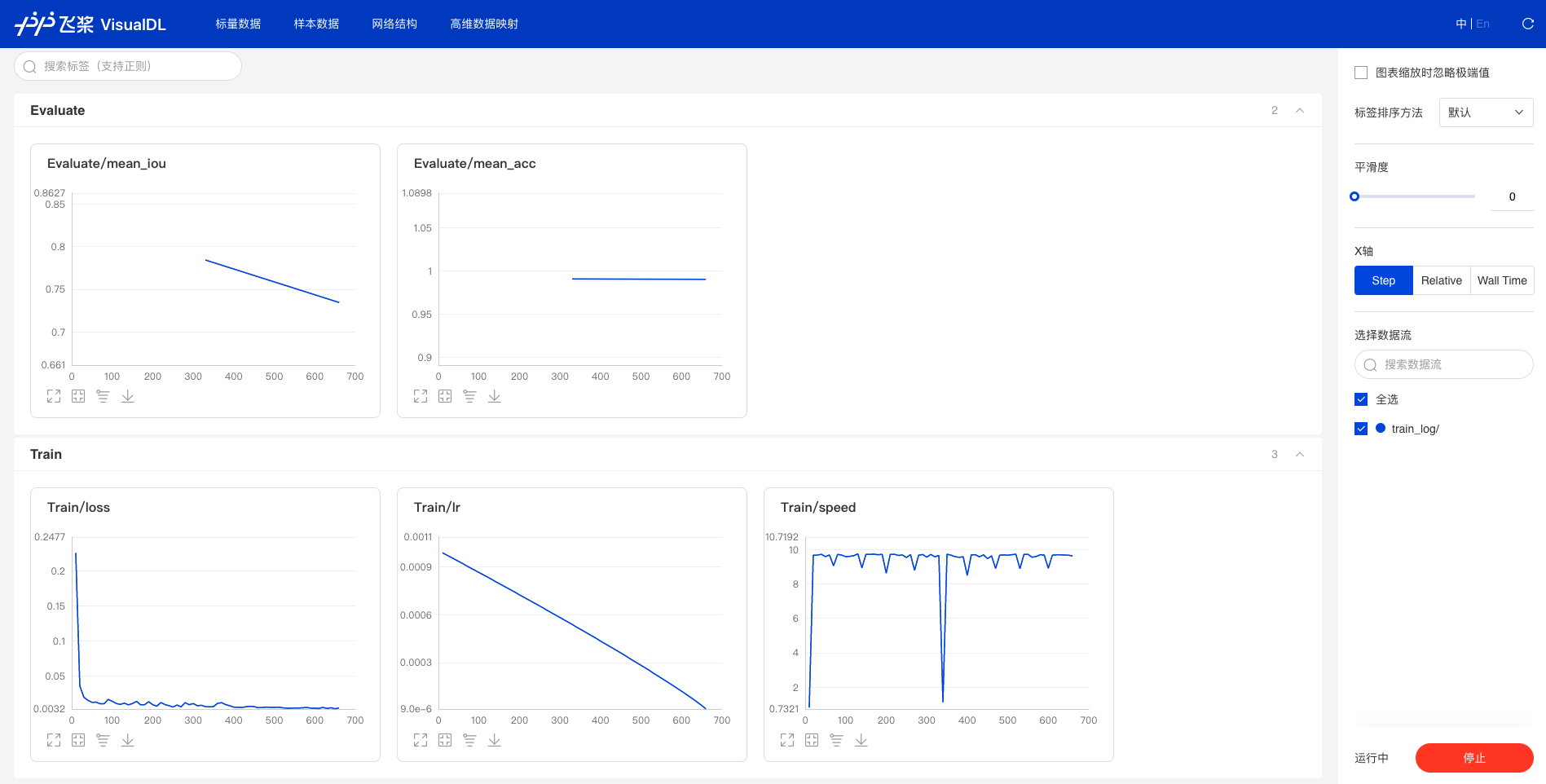

docs/imgs/visualdl_scalar.png (new file, mode 100644)